A Customer-Focused Approach to Tooling

One year after Etsy's Frontend Engineering Group successfully swapped JavaScript Unit Testing tools, it was time to tackle another thorny area of our testing stack: End-To-End Testing. For those new to this practice, End-To-End Testing in web development is meant to simulate an actual user exercising a website. The testing tool launches a real browser, loads the real site, accesses real data ... you get the idea. This makes End-To-End Testing both extremely powerful and extremely fickle. A lot more could be said about the tradeoffs of this form of testing, but that's not what we want to focus on today.

End-To-End Testing had a bad rap at Etsy. Engineers avoided touching that suite unless absolutely necessary. Enter the Testing Platform team. We were responsible for maintaining Etsy's testing tools and coaching the rest of Engineering on effective testing practices. All of the sidelong glances and offhand comments told us End-To-End Testing was an area for improvement. When we finally got the green light to work on it we jumped at the opportunity to do ... something.

We had an issue: we didn't know what problems engineers actually faced. Our team worked in the segment of Engineering traditionally known as Infrastructure. We were tasked with supporting the tools, but we were not the ones writing the tests. We could offer our own theories about what made End-To-End Testing difficult, but they were at best educated guesses. If we wanted to really address the issue we needed to engage our customers: the Product Teams.

Donning our Product Manager Hats

Let's take a moment to talk about the structure of the Testing Platform team and Etsy in general. While Etsy employed a host of Product Managers to help the Product Teams who build user-facing features, they had often not been embedded on the teams working within the Infrastructure space. Testing Platform didn't have a Product Manager, so engaging with our customers took additional effort on our part, but pushing through that challenge was definitely the right thing to do. If you're similarly apprehensive, I hope this story of our work (as imperfect as it was) will give you the confidence to try it for yourself.

Understanding the Problem

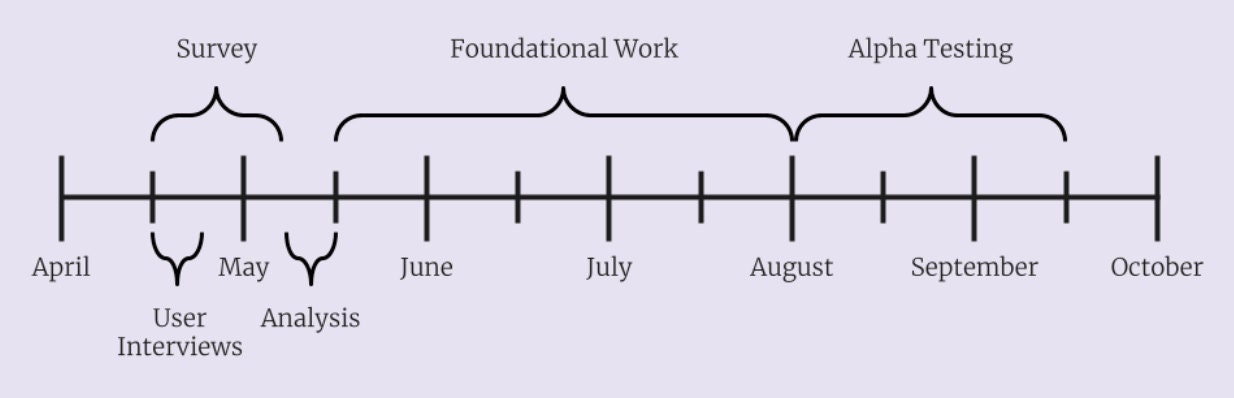

The first step on our journey was to connect with the people who had used our existing tooling. We combed through our Git logs to find anyone who had written a new End-To-End Test or edited an existing one in the prior 18 months. This ensured we heard from folks with fresh experience wrestling with this unruly beast. One-by-one we reached out to them to conduct User Interviews.
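
As a rough illustration of that Git log search, here is a minimal sketch in Python. It is not the script our team ran: the repository path, the test directory, and the function names are all hypothetical, and it assumes the End-To-End Tests live under a single directory. It asks `git log` for the author of every commit touching that directory in the last 18 months and tallies them.

```python
import subprocess
from collections import Counter


def build_git_log_command(test_dir: str, since: str = "18 months ago") -> list[str]:
    """Build a `git log` command listing the author of each commit under test_dir."""
    # test_dir is a hypothetical path; point it at wherever the suite lives.
    return ["git", "log", f"--since={since}", "--format=%an <%ae>", "--", test_dir]


def count_authors(log_output: str) -> Counter:
    """Tally commits per author from one-author-per-line `git log` output."""
    return Counter(line.strip() for line in log_output.splitlines() if line.strip())


def recent_test_authors(repo_path: str, test_dir: str, since: str = "18 months ago") -> Counter:
    """Run git inside repo_path and return a commit count for each author."""
    result = subprocess.run(
        build_git_log_command(test_dir, since),
        cwd=repo_path,
        capture_output=True,
        text=True,
        check=True,
    )
    return count_authors(result.stdout)
```

Calling `recent_test_authors(".", "tests/end_to_end")` from a checkout (again, a made-up path) would return the authors to contact, with the most active ones available via `.most_common()`.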

Our team used the term "User Interview" pretty loosely. In practice, the entire process was conducted via Slack direct messages. A member of our team reached out to each engineer and struck up a conversation. It began by introducing ourselves and the project, explaining how we had identified them as someone to contact, and asking about their experience writing or editing tests. What followed was an exercise in empathetic listening. We heard their stories and asked probing questions to ensure we understood the issues they encountered. In most cases we were done in less than 30 minutes.

Importantly, we also asked everyone to rate their testing experience from 1 to 10 so that we had a quantifiable baseline measure. The average rating was 3.4 out of 10. Looking at each rating individually it was clear: using our existing tooling was universally considered a negative experience.

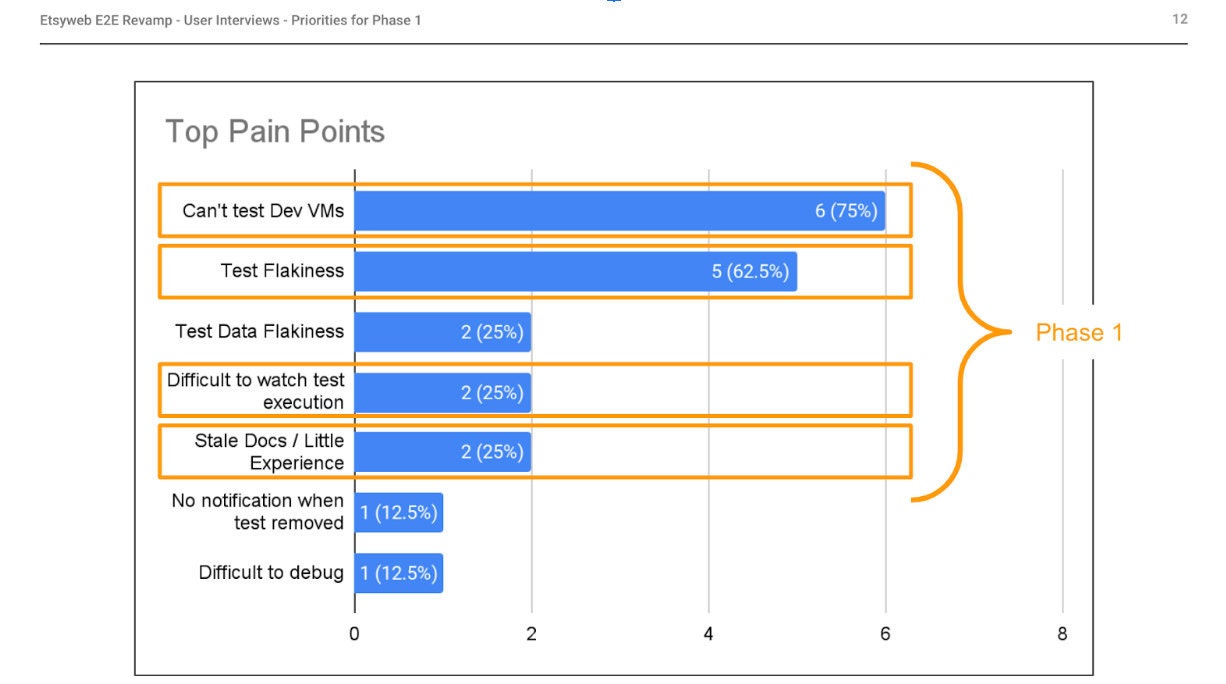

While the interviews were simple, the insights we garnered were invaluable. First and foremost, we learned engineers wanted tooling that could run tests against the virtual machines Etsy uses as its development environments. Feature code and test code are developed and deployed hand-in-hand at Etsy. The inability to execute new tests against environments running new code meant engineers could easily introduce a broken test without realizing it, halting our deployment process.

Secondly, we learned that some engineers had seen tests fail even when there was no bug present in the application. This "flakiness" was not altogether unexpected. Within Software Quality Assurance circles, End-To-End Testing is notorious for its instability. Engineers make careers out of learning to write End-To-End Suites that minimize the toil of constantly fixing tests. What caught our attention though was the pervasiveness of this issue. More than half of the developers we interviewed cited flakiness as a main issue, and in retrospect it was easy to understand why. The folks writing these tests at Etsy were not dedicating their lives to mastering End-To-End Testing. It was just one more tool in their toolbox—a really difficult, unpleasant tool. They needed something that worked for them.

Going into this work we weren't sure what the outcome would be, whether we would enhance the current tooling or replace it altogether. A complete overhaul of any system should not be taken lightly, and fixing the top pain point alone would be considered a win. Based on what we had learned though, a brand new tool seemed warranted. We decided to tackle both of those pain points, along with a few pieces of low-hanging fruit, and set off to find a new End-To-End Testing tool.

Surveying the Landscape

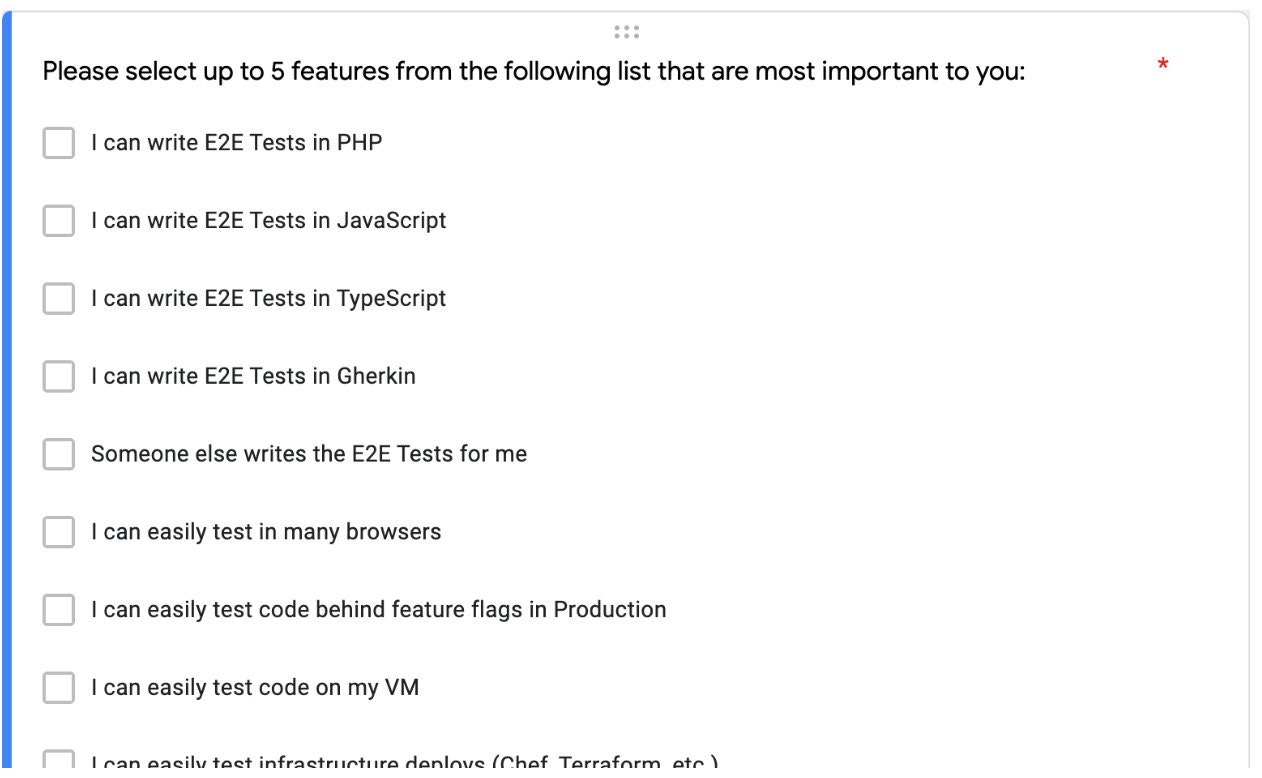

Having made the decision to look for a new tool, the next task for the Testing Platform team was to sift through a broad range of available options. In order to narrow our focus we once again turned to our customers for guidance. This time we broadened our selection criteria to anyone who might use the tooling in the future. We wanted them to tell us what features were must-haves. Since a lot of engineers met the new criteria, we put together a survey with a few simple questions that we could send to everyone at once. We asked them:

- To select the top five most important features from a list we put together. Write-ins were permitted.

- If they recommended any particular tool.

- If we could follow up with them again later during our investigation.

From the engineers we surveyed, we gleaned a few key pieces of information:

- While Etsy's frontend is written with a mix of PHP and JavaScript, the majority of respondents did not show a language preference for one or the other.

- Providing some method of visual test debugging was critical.

- Echoing the User Interviews, the ability to run tests against Development Environments was absolutely vital.

What's more, Testing Platform was able to source two promising tools directly from survey responses. Both could potentially reduce flaky tests thanks to baked-in Actionability Checks meant to prevent race conditions.
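
For readers unfamiliar with the term, an Actionability Check means the tool waits until an element is actually ready before interacting with it, rather than clicking the instant the test script asks. The sketch below shows the general idea in Python. It is not the API of either candidate tool: `click_when_actionable` and its parameters are hypothetical names, and real tools typically check more conditions than visibility and enabled state.

```python
import time
from typing import Callable


def click_when_actionable(
    is_visible: Callable[[], bool],
    is_enabled: Callable[[], bool],
    click: Callable[[], None],
    timeout: float = 5.0,
    interval: float = 0.05,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Poll until the element is visible and enabled, then click it once.

    Raises TimeoutError if the element is still not ready after `timeout` seconds.
    """
    deadline = clock() + timeout
    while True:
        # Only act once every readiness condition holds at the same moment.
        if is_visible() and is_enabled():
            click()
            return
        if clock() >= deadline:
            raise TimeoutError("element never became actionable")
        sleep(interval)
```

Without a check like this, a test that clicks a button before the page finishes rendering fails even though the application is fine, which is exactly the kind of race condition that makes a suite flaky.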

Vetting our Options

With our two candidates selected, the Testing Platform team needed to figure out which one best suited Etsy's needs. As you've probably guessed, we turned to our customers for a third and final time. We reached out to the survey respondents who were open to additional follow-up and invited them to alpha test both proposed solutions. Five engineers were generous enough to offer their services.

While sourcing volunteers, our team laid the foundation for writing tests with both frameworks and educated ourselves in their basic usage. We then met one-on-one with each Alpha Tester to introduce them to both frameworks in a 2-hour pairing session. After that we turned them loose to write their own non-trivial tests in both tools. We allotted six weeks for the Alpha Testing phase to give the participants time to explore the tools while working around their regular job duties. Afterward we met with them again to hear about their experience. Similar to our original User Interviews, we simply chatted with the Alpha Testers about the process of writing tests. We also asked them to rate their experience with each tool from 1 to 10. The results were fairly similar for the two solutions, with an overall neutral impression.

This gave our team confidence that both options were viable, so it fell to us to make the final decision. For this we turned back to the pain points we uncovered in our User Interviews. During the Alpha Testing phase, the tests from one of the proposed solutions behaved consistently and provided actionable error messages when a failure did occur, making it the clear choice to reduce test flakiness.

Product Managed

When everything was said and done, we were able to set up a new End-To-End Testing tool with confidence because we had utilized a few key user engagement practices:

- User Interviews helped us clarify the problem and success criteria.

- Surveying Engineering made sourcing replacements a grassroots effort rather than a top-down decision.

- Alpha Testing potential replacements built confidence that they would hold up to real-world usage.

Having the foresight to gather quantitative metrics also made selling the decision to leadership a snap. We could definitively say how our work had impacted developer satisfaction. These insights weren't free of course. In total we spent about 10 weeks engaging our customers, but the results were well worth it.

Testing Platform is not the only team seeing success from this mode of work either. Etsy is re-envisioning our Infrastructure teams as Enablement teams, focusing beyond simply maintaining infrastructure to comprehensively supporting other engineers. This even includes hiring Product Managers directly onto some of these teams.

Whether your company is ready to make that leap or not, remember that any team—even any individual—can follow this path. The Testing Platform team set out to really listen to our customers. If you do the same and hold their needs as your north star, then you're bound to find your way.