Scaling Etsy Payments with Vitess: Part 1 – The Data Model

At the end of 2020, Etsy’s Payments databases were in urgent need of scaling. Specifically, two of our databases were no longer vertically scalable — they were using the highest resource tier offered on the Google Cloud platform. These databases were crucial to our day-to-day payment processing, so it was a high-risk situation: spikes in traffic could have led to performance issues or even loss of transactions. Our sellers depend on our payments system to get paid for their hard work, making this reliability effort even more critical. A stable and scalable payments platform provides the best possible experience for our sellers.

Between Dec 2020 and May 2022, the Etsy Payments Platform, Database Reliability Engineering and Data Access Platform teams moved 23 tables, totaling over 40 billion rows, from four unsharded payments databases into a single sharded environment managed by Vitess. While Vitess was already managing our database infrastructure, this was our first usage of vindexes for sharding our data.

We did this work in two phases. First we migrated our seller ledger infrastructure, a contained domain that determines seller bills and payouts. For the second phase, we worked to reduce load on our primary payments database, which houses transaction data, payment data and much more. This database has been around for over a decade and hosted nearly 90 tables before the migration. To cut down the scope of the project we strategically chose to migrate just a subset of tables with the highest query volume, adding in others related to those high-volume tables as needed. In the end, even operating on just that subset, we were able to reduce load by 60%, giving us room to scale for many years down the road.

Throughout this project, we encountered challenges across the stack. This is the first in a series of posts in which we’ll share how we approached those challenges, both in application and infrastructure. Here we’ll be focusing on making application data models available for sharding. In part 2, we’ll discuss what it takes to cut over a crucial high-traffic system, and part 3 will go into detail about reducing the risks that emerge during the cutover.

An Ideal Sharding Model: Migrating the Ledger

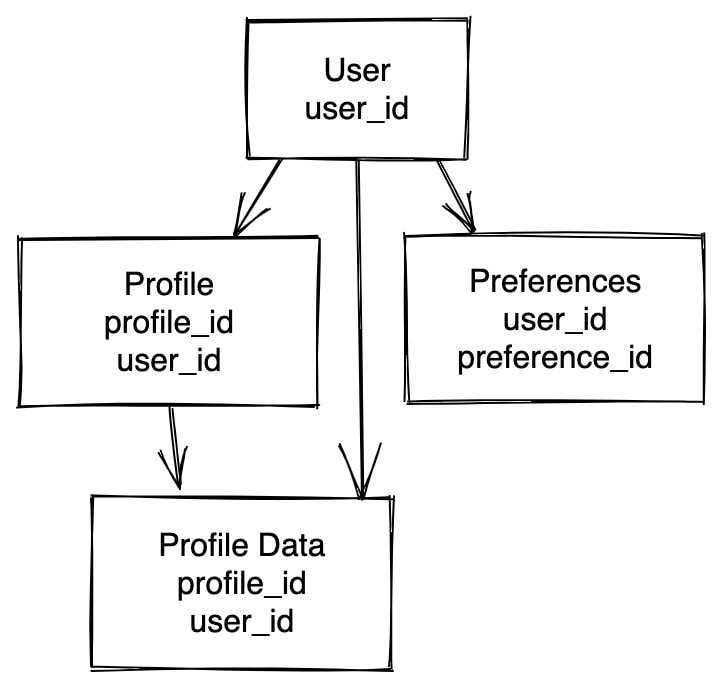

A data model’s shape impacts how easy it is to shard, and its resiliency when sharded. Data models that are ideal for sharding are shallow, with a single root entity that all other entities reference via foreign key. For example, here is a generic data model for a system with Users, and some data directly related to Users:

This type of data model allows all tables in the domain to share a shardifier (in this example, user_id), meaning that related records are colocated. Even with Vitess handling them, cross-shard operations can be inefficient and difficult to reason about; colocated data makes it possible for operations to take place on a single shard. Colocation can also reduce how many shards a given request depends on, mitigating user impacts from a shard going down or having performance issues.

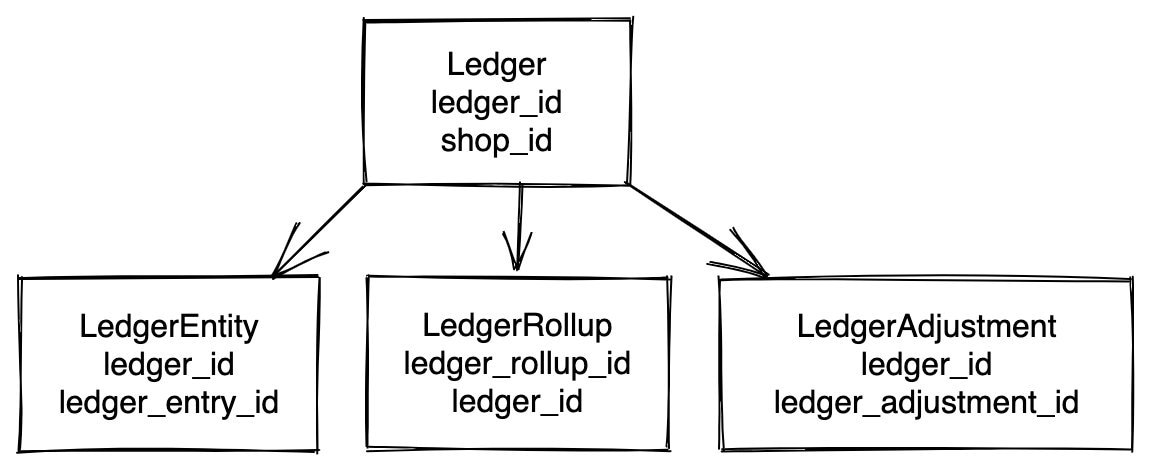

Etsy's payments ledger, our first sharding migration, was close to this ideal shape. Each Etsy shop has a ledger of payments activity, and all entities in this domain could be related to a single root Ledger entity.

The business rule that maintains one ledger to a shop means that ledger_id and shop_id would both be good candidates for a shardifier. Both would keep all shop-related data on a single shard, isolating shard outages to a minimal number of shops. We went with shop_id because it's already in use as a shardifier elsewhere at Etsy, and we wanted to stay consistent. It also future-proofs us in case we ever want to allow a shop to have multiple ledgers.

This "ideal" model may have been conceptually simple, but migrating it was no small task. We needed to add a shop_id column to tables and to modify constraints such as primary keys, unique keys, and other indexes, all while the database was resource-constrained. Then we had to backfill values to billions of existing rows, while–again–the database was resource-constrained. (We came up with a real-time tunable script that could backfill at up to 60x faster using INSERT … ON DUPLICATE KEY UPDATE statements.) And when we had our new shardifier in place there were hundreds of existing queries to update with it, so Vitess would know how to route to the appropriate shard, and hundreds of tests whose test fixture data had to be updated.

Non-ideal Models: Sharding Payments

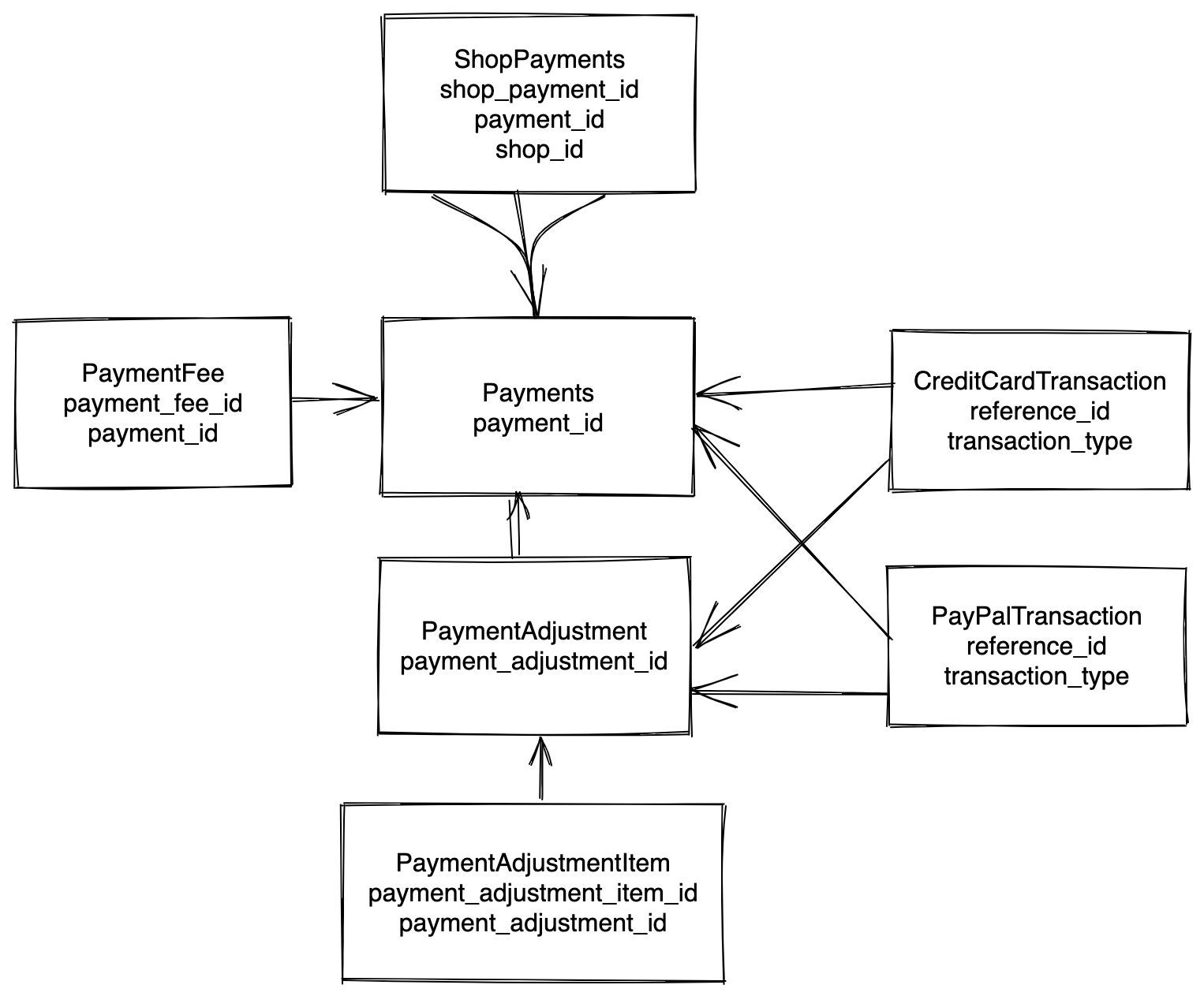

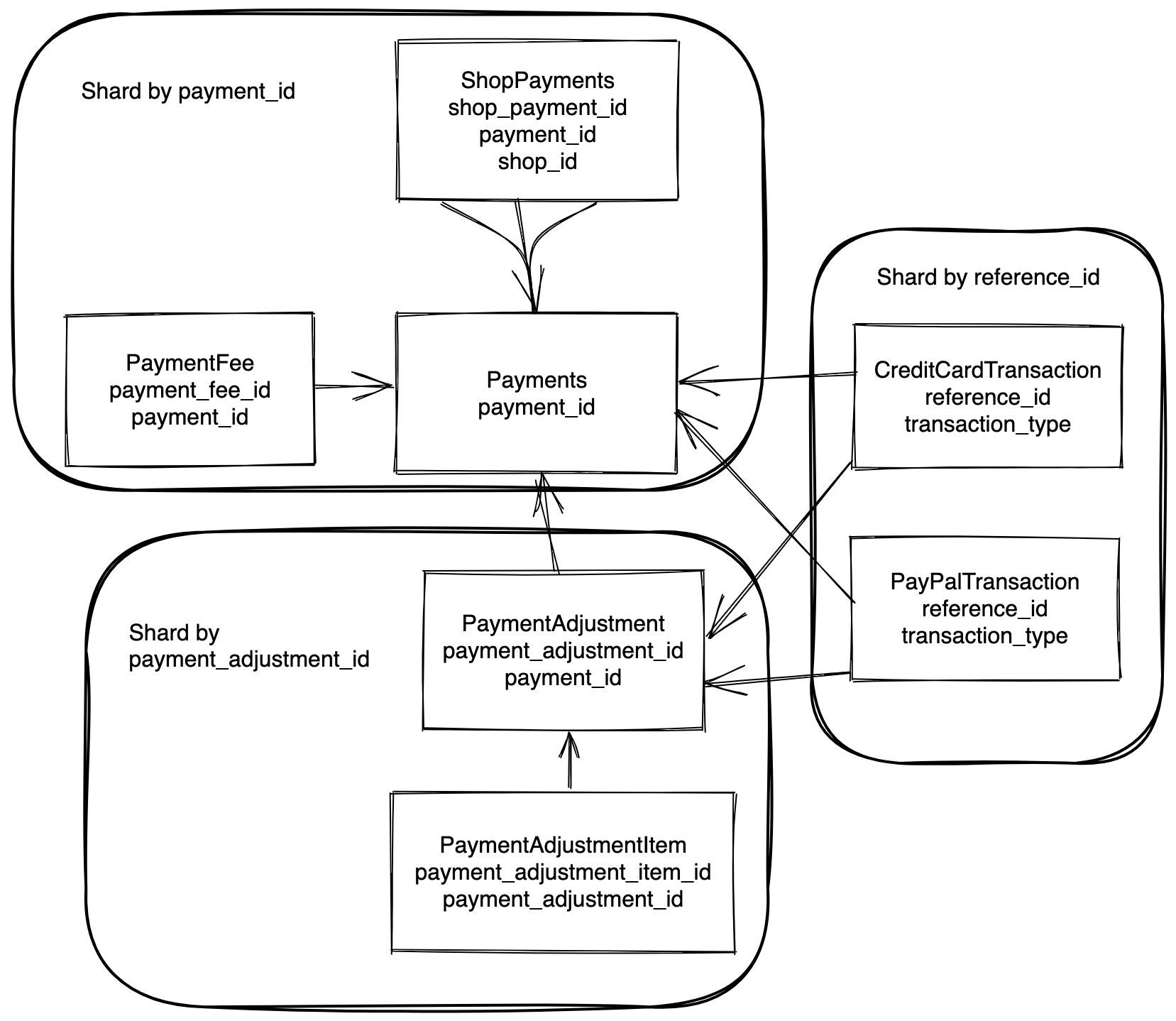

For our second phase of work, which would reduce load on our primary payments database, the data model was less straightforward. The tables we were migrating have grown and evolved over a decade-plus of changing requirements, new features, changes in technology and tight deadlines. As such, the data model was not a simple hierarchy that could lend itself to a standard Etsy sharding scheme. Here’s a simplified diagram of the payments model:

Each purchase can relate to multiple shops or other entities. Payments and PaymentAdjustments are related to multiple types of transactions, CreditCardTransactions and PayPalTransations. Payments are also related to many ShopPayments with different shop_ids, so sharding on that familiar Etsy key would spread data related to a single payment across many shards. PaymentAdjustments meanwhile are related to Payment by payment_id, and to the two transaction types by reference_id (which maps to payment_adjustment_id).

This is a much more complex case than the Ledger model, and to handle it we considered two approaches, described below. As with any technical decision, there were tradeoffs to be negotiated. To evaluate our options, we spiked out changes, diagrammed existing and possible data models, and dug into production to understand how existing queries were using the data.

Option 1: Remodel the core

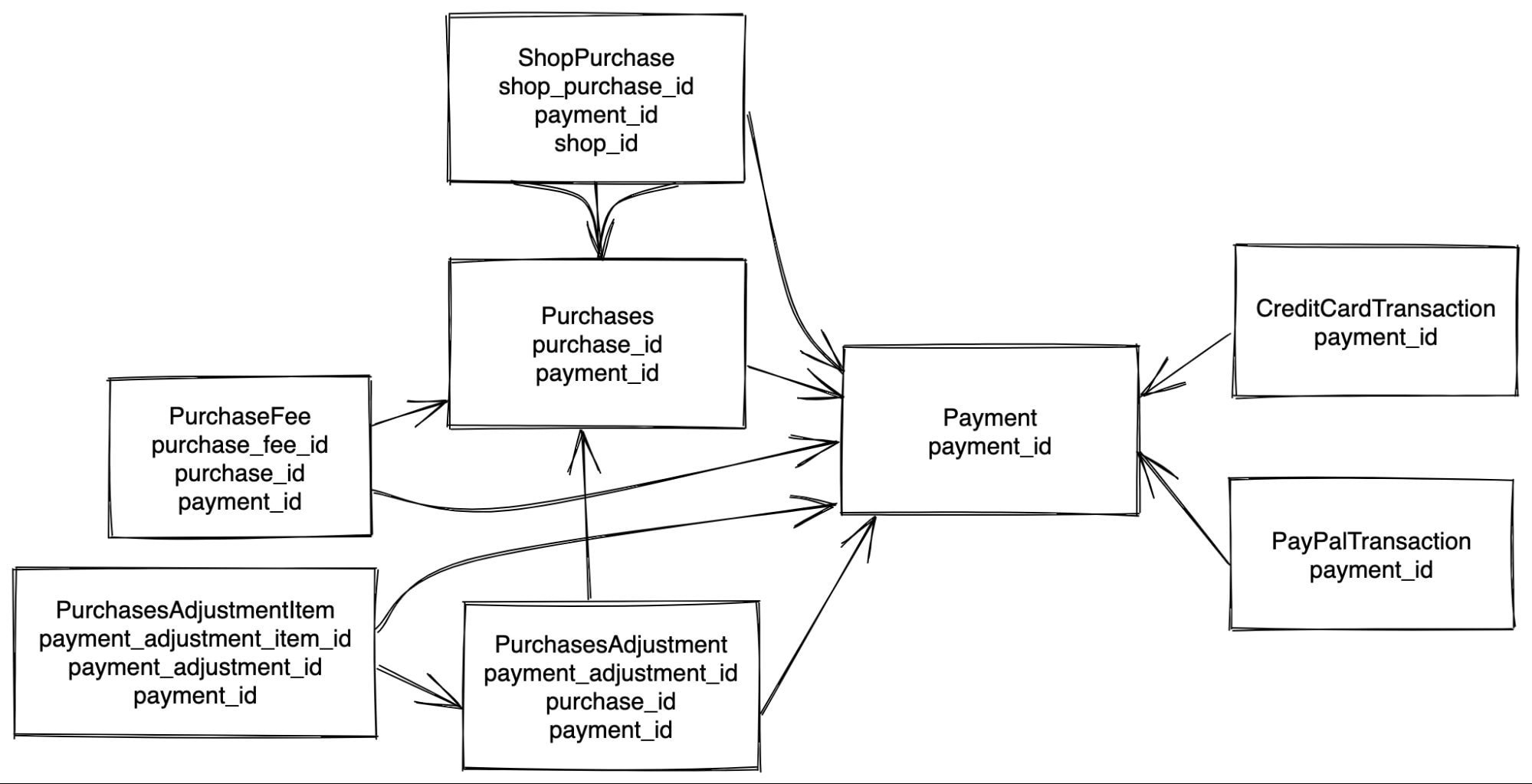

The first approach we considered was to modify the structure of our data model with sharding in mind. The simplified diagram below illustrates the approach:

We’ve renamed our existing “Payment” models “Purchase,” to better reflect what they represent in the system. We’ve created a new entity called Payment that groups together all entities related to any kind of payment that happens on Etsy. With this model we move closer to an “ideal” sharding model, where all records related to a single payment live on the same shard. We can shard everything by payment_id and enable colocation of all these related entities, with the attendant benefits of resilience and predictability that we've already noted.

Introducing a consequential change to an important data model is costly. It would require sweeping changes to core application models and business logic and engineers would have to learn the new model. Etsy Payments is a large and complex codebase, and integrating it with this a new built-to-shard model would lead to a scope of work well beyond our goal of scaling the database.

Option 2: Find shardifiers where they live

The second approach was to shard smaller hierarchies using primary and foreign keys already in our data model, as illustrated below:

Here we’ve sharded sub-hierarchies in the data together, using reference_id as a polymorphic shardifier in the transaction model so we can collocate transactions with their related Payment or PaymentAdjustment entities. (The downside of this approach is that PaymentAdjustments are also related to Payments, and those two models will not be colocated.)

Considering how urgent it was that we move to a scalable infrastructure, and the importance of keeping Etsy Payments reliable in the meantime, this more modest approach is the one we opted for. As discussed above, most of the effort in the Ledger phase of the project went towards adding columns, modifying primary keys to existing data tables, backfilling data, and modifying queries to add a new shardifier. In contrast, taking established primary and foreign keys as shardifiers whenever possible would cut out nearly all of that effort from the Payments work, giving us a much shorter timeline to completion while still achieving horizontal scalability.

Without having to manage the transition to a new data model, we could focus on scaling with Vitess. As it happens, Vitess has re-sharding features that give us flexibility to change our shard design in future, if we see the need; sharding on the basis of the legacy payments model was not a once-and-forever decision. Vitess can even overcome some of the limitations of our "non-ideal" data model using features such as secondary indexes: lookup tables that Vitess maintains to allow shard targeting even when the shardifier is not in a query.

This was part 1 of our series on Sharding Payments with Vitess. We'll have a lot more to say about our real-world experience of working with Vitess in part 2 and part 3 of this series. Please join us for those discussions.