Building a Platform for Serving Recommendations at Etsy

With a catalog of more than 100 million magical listings, Etsy relies extensively on software infrastructure and machine learning (ML) to recommend the right items to our buyers at the right time.

In this post, I’ll cover how our recommendations architecture has evolved over the years, and how we designed a platform that lets Etsy product teams use a variety of ML building blocks to offer our users personalized and real-time recommendations.

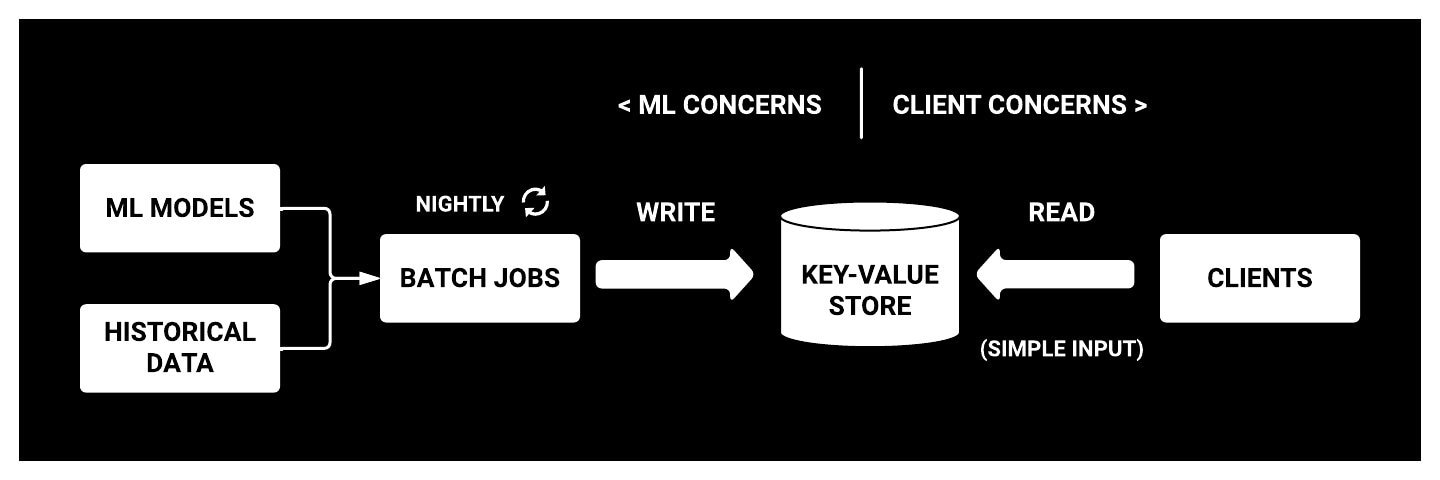

Batch architecture (aka the simple way)

Let’s start with some background. When Etsy began offering recommendations three years ago, we pre-computed them statically via an offline process.

A set of nightly batch jobs used machine-learning models trained on historical data to populate a key-value store with pre-ranked recommendations. Each key was a listing ID, and its value was the list of listing IDs recommended alongside it.
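To make the shape of that batch pipeline concrete, here is a minimal sketch. It assumes a toy co-view count as a stand-in for the real ML models (which the post does not describe), and the function names `nightly_batch_job` and `get_recommendations` are hypothetical. The point is the data layout: an offline job writes `listing_id -> [listing_id, ...]`, and request time is a single lookup.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List


def nightly_batch_job(sessions: Iterable[List[int]], top_k: int = 3) -> Dict[int, List[int]]:
    """Offline pass: build listing_id -> pre-ranked recommended listing_ids.

    Hypothetical scoring: co-view counts stand in for the ML model.
    """
    co_views: Dict[int, Counter] = defaultdict(Counter)
    for session in sessions:
        unique = set(session)
        for a in unique:
            for b in unique:
                if a != b:
                    co_views[a][b] += 1

    store: Dict[int, List[int]] = {}
    for listing_id, counts in co_views.items():
        # Highest score first; break ties by listing ID so output is deterministic.
        ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
        store[listing_id] = [other for other, _ in ranked[:top_k]]
    return store


def get_recommendations(store: Dict[int, List[int]], listing_id: int) -> List[int]:
    # Request time: one key-value lookup, no model inference.
    return store.get(listing_id, [])


store = nightly_batch_job([[1, 2, 3], [1, 2], [2, 3, 4]])
print(get_recommendations(store, 1))  # [2, 3]
print(get_recommendations(store, 99))  # []
```

Because all of the ranking happens offline, request latency stays flat no matter how expensive the model is, which is exactly the simplicity (and the staleness) described here.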

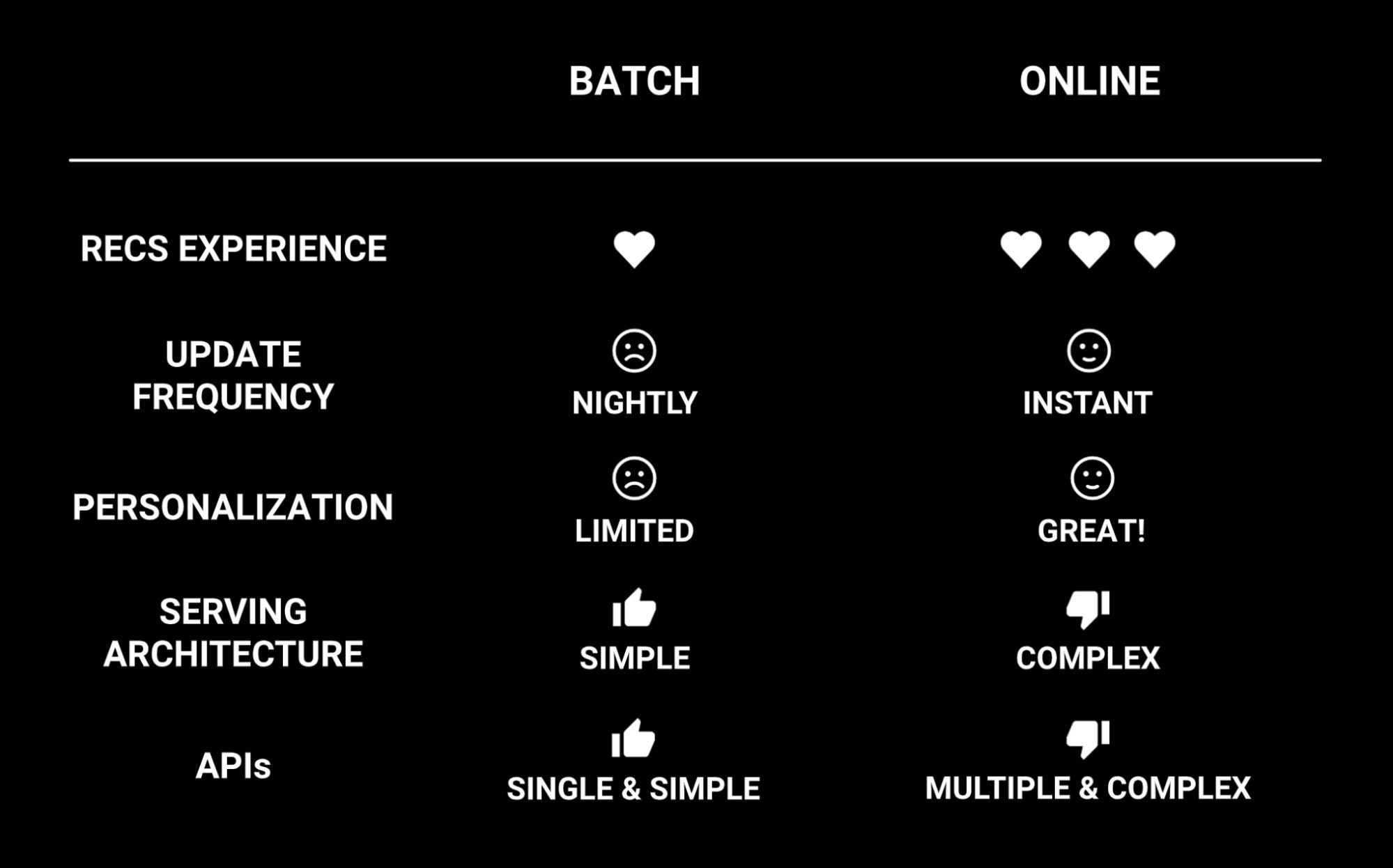

From a serving architecture perspective, this was an elegantly simple solution. Our key-value store kept ML and client concerns nicely separated, and it kept request-time latency constant and low.

However, since recommendations were generated only once a day, from historical rather than live data, we had limited opportunities for creating dynamic and personalized user experiences. We envisioned, for instance, recommendation modules that could adapt to a user's browsing journey in real time, while they were still browsing, but our batch architecture made that a difficult proposition.

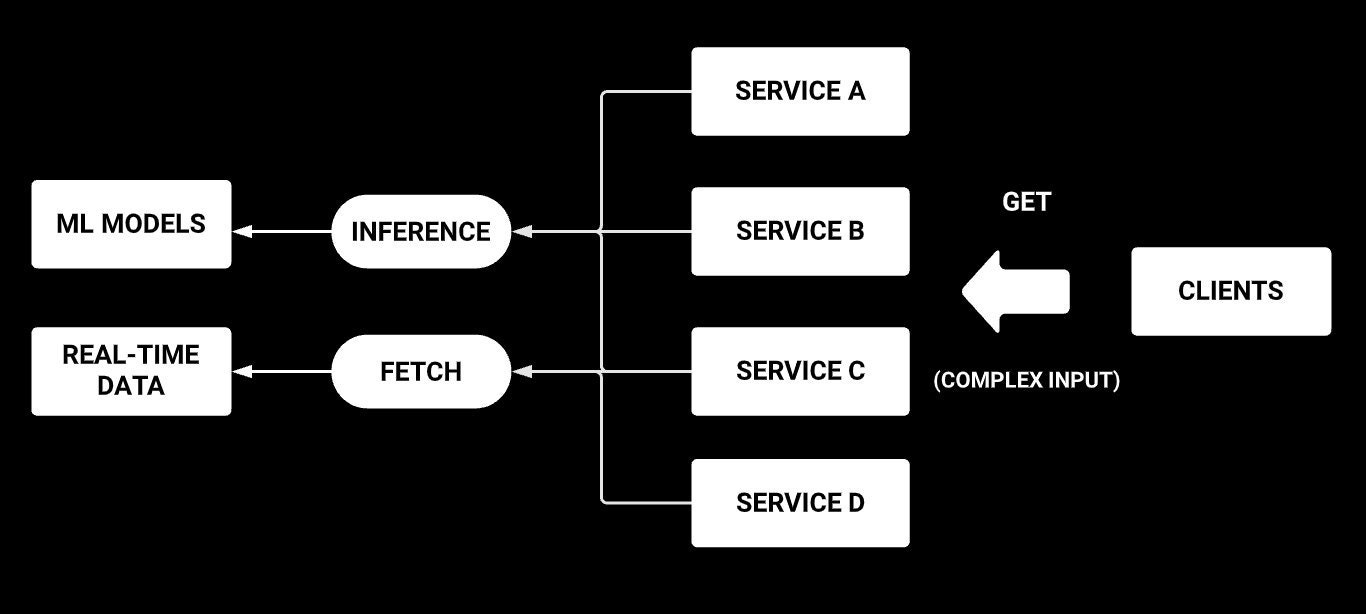

Online architecture (aka the great but hard way)

To offer personalized and real-time recommendations to our buyers, we explored opportunities to incorporate session data into our systems, while continuing to take into account user data and privacy choices.

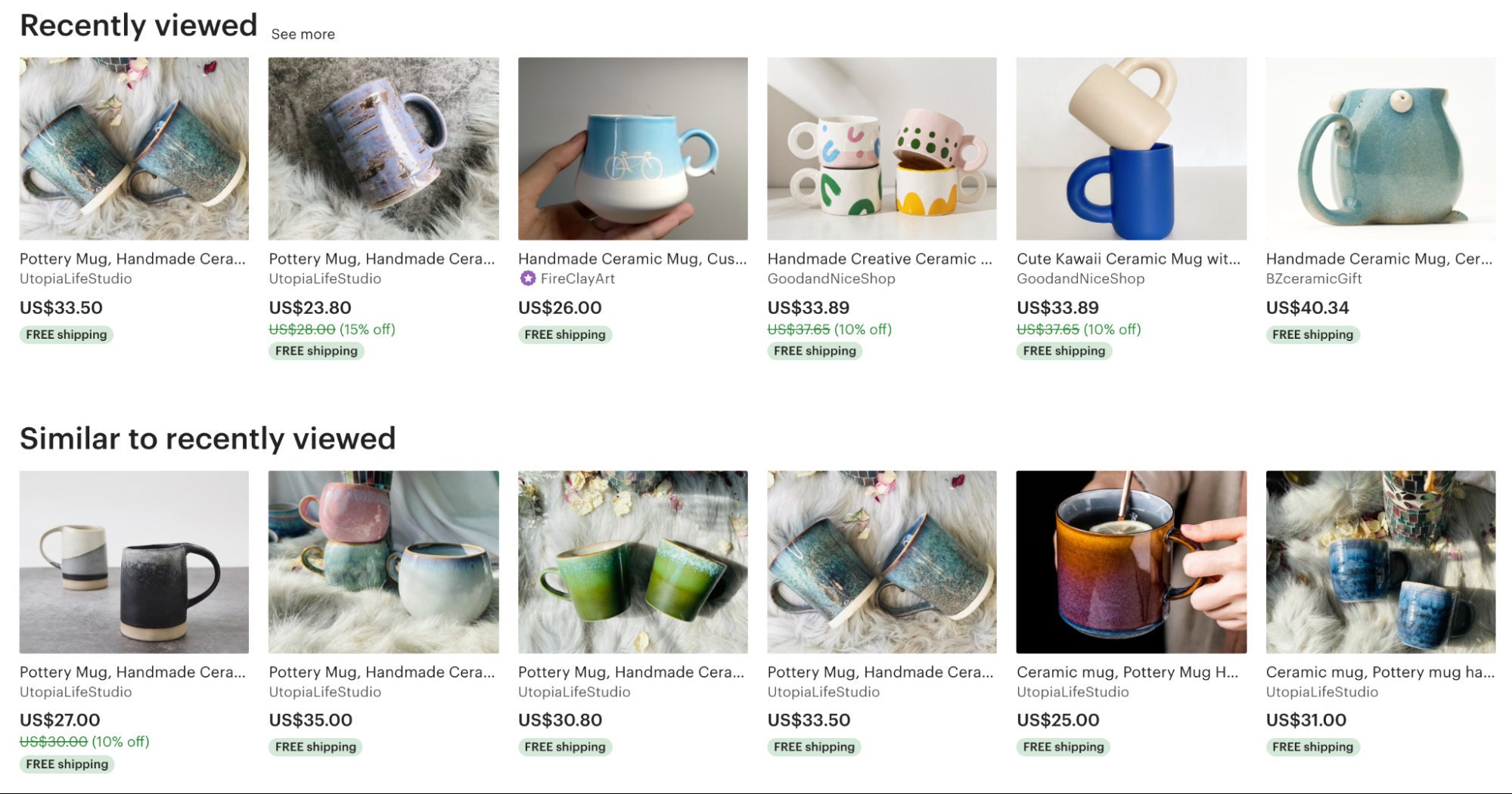

Eventually, we decided to experiment with an online architecture that allowed for creating new personalized user experiences. For example, it would let us look at the search query that had brought a user to a given listing so that we could derive a meaningful set of further items to show them. We could also take a user’s recently viewed items and make deductions about where they were likely to want to go next: recommending mugs, for instance, to someone beginning the hunt for a new favorite cup.

To move from a batch to an online architecture, we built services that accept complex input (as opposed to simple input, like an item ID), fetch real-time data (as opposed to historical data only), and make ML predictions on demand (as opposed to in nightly batches). Depending on the use case, these services can be called at request time or asynchronously.
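The three differences from the batch design can be sketched in a few lines. This is an illustration under stated assumptions, not Etsy's service: `RecsRequest`, `recommend_online`, the `fetch_recent_views` callback, and the toy scoring function are all hypothetical. The request carries more than a listing ID, recent activity is fetched when the request arrives, and candidates are scored on demand.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class RecsRequest:
    """Hypothetical 'complex input': context beyond a single listing ID."""
    listing_id: int
    user_id: Optional[int] = None
    search_query: Optional[str] = None


def recommend_online(
    request: RecsRequest,
    fetch_recent_views: Callable[[int], List[int]],
    score: Callable[[RecsRequest, List[int], int], float],
    candidates: List[int],
    k: int = 3,
) -> List[int]:
    # Fetch real-time data when the request arrives, not from a nightly snapshot.
    recent = fetch_recent_views(request.user_id) if request.user_id is not None else []
    # Score every candidate on demand with the (hypothetical) model.
    scored = [(score(request, recent, c), c) for c in candidates if c != request.listing_id]
    scored.sort(key=lambda sc: (-sc[0], sc[1]))
    return [c for _, c in scored[:k]]


# Toy stand-ins for a real-time activity store and an ML model.
titles = {1: "blue mug", 2: "red mug", 3: "tea cup", 4: "wall art"}
recent_views = {42: [3]}


def toy_score(req: RecsRequest, recent: List[int], candidate: int) -> float:
    s = 0.0
    if req.search_query and any(w in titles[candidate] for w in req.search_query.split()):
        s += 1.0  # matches the query that brought the user here
    if candidate in recent:
        s += 0.5  # the user looked at it recently
    return s


req = RecsRequest(listing_id=1, user_id=42, search_query="mug")
print(recommend_online(req, lambda u: recent_views.get(u, []), toy_score, [1, 2, 3, 4], k=2))  # [2, 3]
```

The same listing now yields different results depending on the query and the session, which is the dynamism the batch key-value store could not provide.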

Of course, building a service-oriented architecture like this comes with tradeoffs. We gain dynamism but lose the clean separation of machine-learning and client concerns. The system becomes harder to code against and potentially harder to reason about, and the underlying serving architecture grows correspondingly more complex.

We found that we faced two key challenges:

- Keeping latency and error rate low. Caching, compression, and inference in small concurrent batches helped, but none of these techniques scales indefinitely, and we found that good tooling and solid observability were essential.

- Keeping APIs simple. The growing number of services, combined with complex inputs, made it hard to maintain a simple and consistent API. The bespoke APIs we built around these services allowed us to generate great recommendations, but each new one added to our technical debt.
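Of the latency techniques listed, inference in small concurrent batches is the easiest to show in isolation. The following is a minimal asyncio sketch, assuming a hypothetical async `score_batch` model client; the batch size and concurrency cap are illustrative values, not Etsy's settings. One large scoring request is split into small batches that run concurrently, bounded by a semaphore so the model server is not flooded.

```python
import asyncio
from typing import Awaitable, Callable, List, Sequence


async def score_in_batches(
    items: Sequence[int],
    score_batch: Callable[[List[int]], Awaitable[List[float]]],
    batch_size: int = 2,
    max_concurrency: int = 4,
) -> List[float]:
    """Split one large inference request into small batches scored concurrently."""
    semaphore = asyncio.Semaphore(max_concurrency)
    batches = [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]

    async def run(batch: List[int]) -> List[float]:
        async with semaphore:  # cap the number of in-flight requests to the model
            return await score_batch(batch)

    # gather returns results in submission order, so scores line up with `items`.
    per_batch = await asyncio.gather(*(run(b) for b in batches))
    return [score for scores in per_batch for score in scores]


# Usage with a stand-in model that records the batches it receives.
seen_batches: List[List[int]] = []


async def fake_model(batch: List[int]) -> List[float]:
    seen_batches.append(batch)
    await asyncio.sleep(0)  # simulate a network hop
    return [float(x * 2) for x in batch]


scores = asyncio.run(score_in_batches([1, 2, 3, 4, 5], fake_model))
print(scores)  # [2.0, 4.0, 6.0, 8.0, 10.0]
```

Smaller batches let the first results come back sooner and spread load across model replicas; as the post notes, though, this only pushes the ceiling up rather than removing it.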

A platform approach (aka the scalable way)

Switching to an online architecture unlocked a range of opportunities for new user experiences. Product teams were so eager to integrate with the system that we knew we needed to make it scalable as soon as possible.

At Etsy, we value continuous learning and we want to empower teams to experiment. The overwhelming number of requests the Recs team was receiving, combined with our experience of the technical challenges of our online architecture, motivated us to move from a demand model (in which a single team is responsible for creating new recommendations) to an enablement model. We decided that the way forward was to invest in building a recommendations platform. We would give our product teams a set of usable tools to create recommendation modules for themselves, and set up infrastructure to move their work to production with minimal friction.

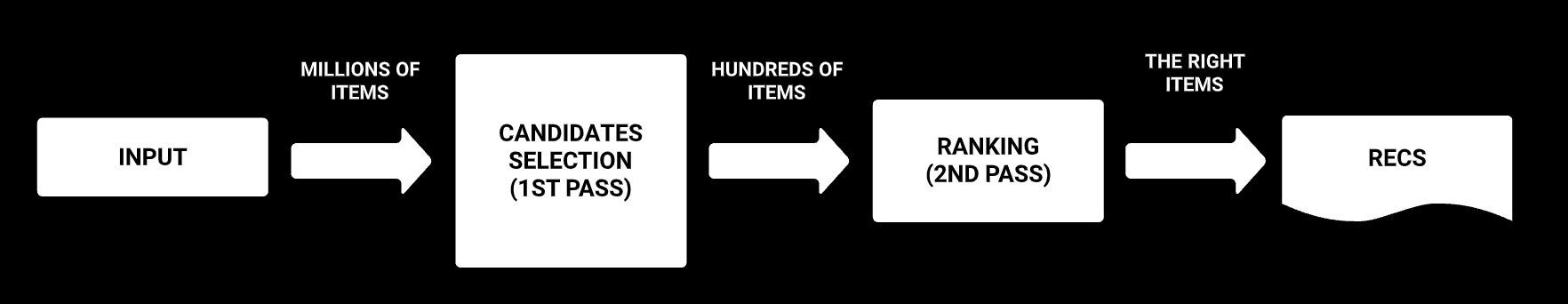

Generating personalized recommendations from a catalog of 100 million listings within an acceptable latency budget, and with a reasonable amount of hardware, is not an easy task. To reduce overhead, we often approach recommendations as a two-pass process: a candidate selection pass first winnows the whole catalog down to the few hundred most relevant items, and a ranking pass then orders those candidates to decide which items are right for this user at this moment.
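The two-pass idea can be sketched with plain Python. This is an illustrative sketch, not the production system: the first pass here is a brute-force dot-product scan (a real system would use an approximate nearest-neighbor index), and the second-pass `ranker` is a hypothetical callable standing in for a heavier personalized model.

```python
import heapq
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def select_candidates(query: Vector, catalog: Dict[int, Vector], n: int) -> List[int]:
    """Pass 1: cheap similarity over the whole catalog; keep only the top n."""
    return heapq.nlargest(n, catalog, key=lambda lid: dot(query, catalog[lid]))


def rank(candidates: List[int], ranker: Callable[[int], float], k: int) -> List[int]:
    """Pass 2: the expensive model runs only on the n survivors."""
    return sorted(candidates, key=ranker, reverse=True)[:k]


def two_pass(query: Vector, catalog: Dict[int, Vector], ranker: Callable[[int], float],
             n: int = 100, k: int = 10) -> List[int]:
    return rank(select_candidates(query, catalog, n), ranker, k)


# Toy catalog of listing embeddings and hypothetical per-user ranking scores.
catalog = {1: [1.0, 0.0], 2: [0.9, 0.1], 3: [0.0, 1.0], 4: [0.8, 0.2]}
personal = {1: 0.2, 2: 0.9, 3: 1.0, 4: 0.5}

print(select_candidates([1.0, 0.0], catalog, n=3))  # [1, 2, 4]
print(two_pass([1.0, 0.0], catalog, lambda lid: personal[lid], n=3, k=2))  # [2, 4]
```

Note that listing 3 would score highest with the ranker but never reaches it: the cost savings of the first pass come from the ranker seeing only a small slice of the catalog, so retrieval quality bounds what ranking can achieve.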

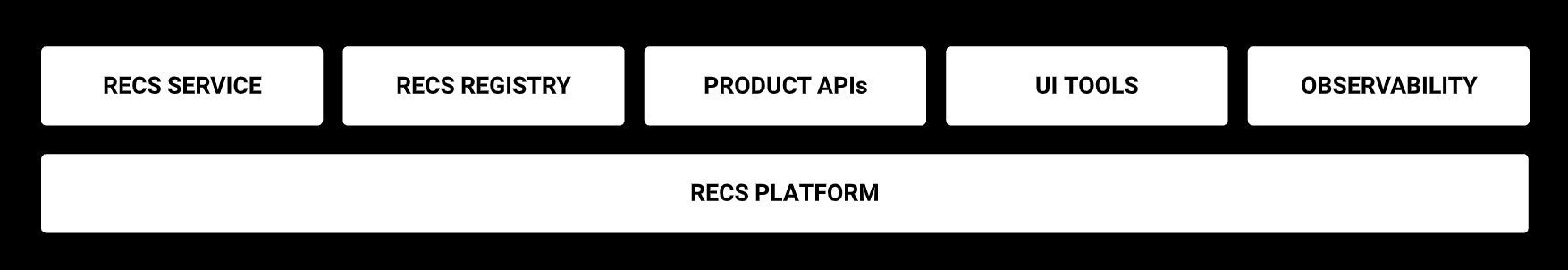

To streamline our serving infrastructure and remove the need for bespoke implementations, we built a central two-pass ranking service that allows teams to generate recommendations using a common set of building blocks.

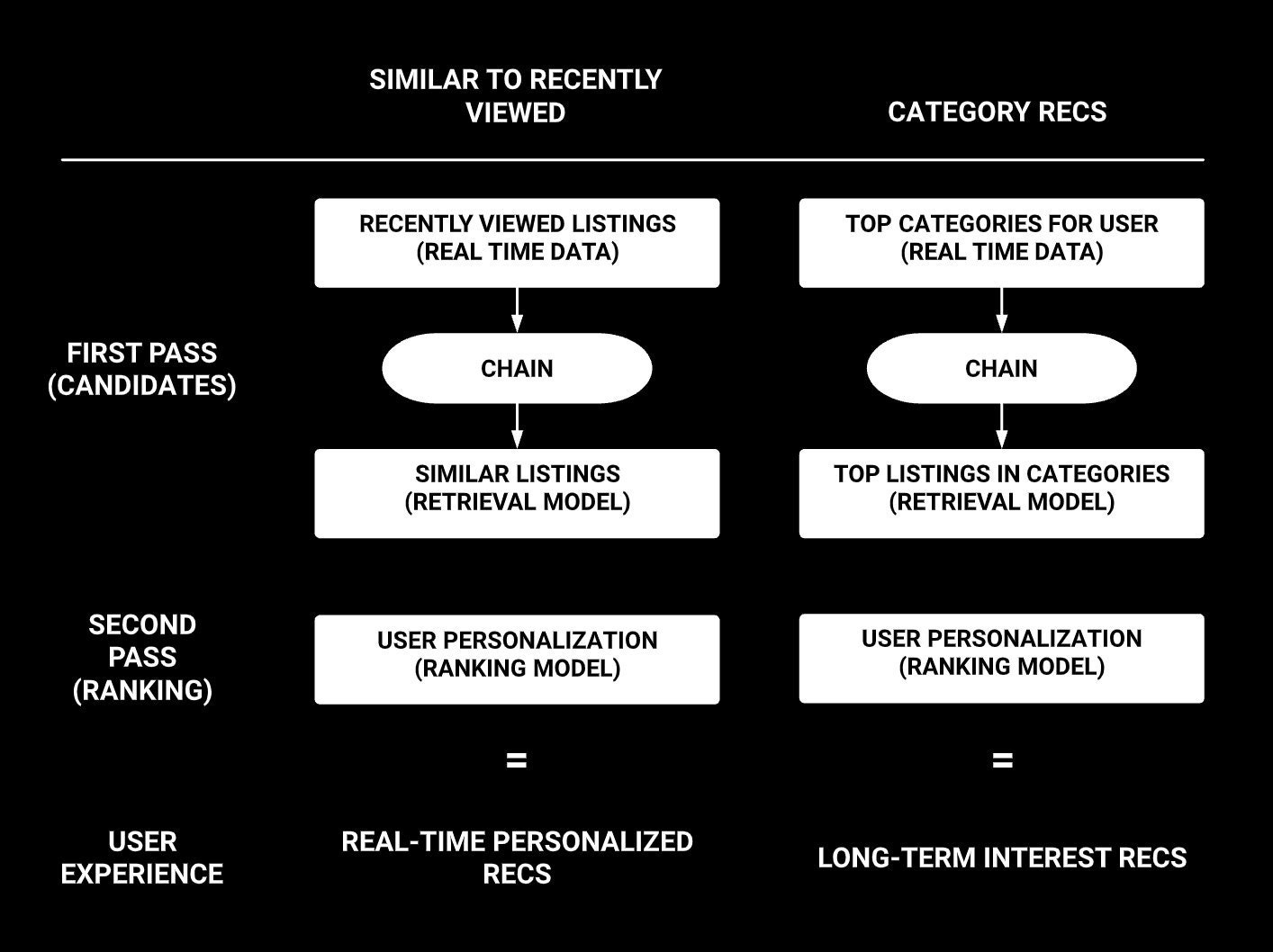

These building blocks fall into three main buckets:

- real-time services for fetching real-time user activity data,

- a set of retrieval ML models for finding the right candidates (e.g. nearest-neighbor indexes),

- and a set of ranking ML models for finding the best items from a set of candidates and personalizing the recommendations.
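One way to picture a central service built from these three kinds of building blocks is as three small interfaces plugged into a single two-pass pipeline. The sketch below is hypothetical: the protocol names, method signatures, and toy implementations are assumptions for illustration, and the real service is written in Scala on Finagle rather than Python.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


class ActivityService(Protocol):
    """Real-time building block: recent user activity."""
    def recent_listings(self, user_id: int) -> List[int]: ...


class Retriever(Protocol):
    """Retrieval building block, e.g. backed by a nearest-neighbor index."""
    def candidates(self, seeds: List[int], n: int) -> List[int]: ...


class Ranker(Protocol):
    """Ranking building block: scores candidates for a user."""
    def score(self, user_id: int, listing_ids: List[int]) -> List[float]: ...


@dataclass
class TwoPassRecs:
    activity: ActivityService
    retriever: Retriever
    ranker: Ranker
    n_candidates: int = 200

    def recommend(self, user_id: int, k: int) -> List[int]:
        seeds = self.activity.recent_listings(user_id)
        cands = self.retriever.candidates(seeds, self.n_candidates)
        scores = self.ranker.score(user_id, cands)
        ranked = sorted(zip(scores, cands), key=lambda sc: -sc[0])
        return [c for _, c in ranked[:k]]


# Toy implementations; any object with matching methods fits the protocols.
class StaticActivity:
    def __init__(self, views: Dict[int, List[int]]) -> None:
        self.views = views

    def recent_listings(self, user_id: int) -> List[int]:
        return self.views.get(user_id, [])


class NeighborTable:
    def __init__(self, neighbors: Dict[int, List[int]]) -> None:
        self.neighbors = neighbors

    def candidates(self, seeds: List[int], n: int) -> List[int]:
        out: List[int] = []
        for s in seeds:
            for c in self.neighbors.get(s, []):
                if c not in out and c not in seeds:
                    out.append(c)
        return out[:n]


class PopularityRanker:
    def __init__(self, popularity: Dict[int, float]) -> None:
        self.popularity = popularity

    def score(self, user_id: int, listing_ids: List[int]) -> List[float]:
        return [self.popularity.get(i, 0.0) for i in listing_ids]


recs = TwoPassRecs(StaticActivity({7: [1]}), NeighborTable({1: [2, 3, 4]}),
                   PopularityRanker({2: 0.1, 3: 0.9, 4: 0.5}))
print(recs.recommend(user_id=7, k=2))  # [3, 4]
```

Because each block sits behind a narrow interface, a team can swap in a different retriever or ranker without touching the rest of the pipeline, which is what removes the need for bespoke services.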

Producing great recommendations often requires combining different pieces. As part of our central service, we built simple mechanisms for chaining building blocks. Chaining is a powerful mechanism that allows us to create personalized user experiences, such as recommending items that are similar to your recently viewed items or recommending items in your favorite categories.
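Chaining can be modeled as ordinary function composition, where each building block takes a list of listing IDs and returns a new one. The sketch below assumes that simplification; `chain`, `similar_items`, `in_categories`, and `top_k` are hypothetical names, and the neighbor table and category map are toy data. It composes the two examples from the paragraph: items similar to your recently viewed items, restricted to your favorite categories.

```python
from functools import reduce
from typing import Callable, Dict, List, Set

Step = Callable[[List[int]], List[int]]


def chain(*steps: Step) -> Step:
    """Compose building blocks left to right: each step's output feeds the next."""
    return lambda seeds: reduce(lambda acc, step: step(acc), steps, seeds)


def similar_items(neighbors: Dict[int, List[int]]) -> Step:
    # Hypothetical retrieval block backed by a precomputed neighbor table.
    def step(ids: List[int]) -> List[int]:
        out: List[int] = []
        for i in ids:
            for n in neighbors.get(i, []):
                if n not in out and n not in ids:
                    out.append(n)
        return out
    return step


def in_categories(category_of: Dict[int, str], allowed: Set[str]) -> Step:
    # Keep only listings whose category the user favors.
    return lambda ids: [i for i in ids if category_of.get(i) in allowed]


def top_k(k: int) -> Step:
    return lambda ids: ids[:k]


recently_viewed = [10, 11]
neighbors = {10: [20, 21], 11: [21, 22]}
category_of = {20: "mugs", 21: "art", 22: "mugs"}

pipeline = chain(similar_items(neighbors), in_categories(category_of, {"mugs"}), top_k(2))
print(pipeline(recently_viewed))  # [20, 22]
```

Keeping every step to the same "list in, list out" shape is what makes the pieces freely recombinable; a production system would pass richer context (user, scores, features) between steps.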

For the implementation, we decided to extend our in-house RPC framework, which is built on Finagle (Scala). Finagle provides great abstractions for composing RPC calls, which makes it easy to build highly concurrent services.
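The kind of composition a two-pass service needs (fan out to several backends at once, bound each call by a timeout, and degrade gracefully) can be sketched with Python's asyncio as a rough analogue. This is not Finagle's API: Finagle expresses the same ideas with Scala `Future` combinators, and the backend names, the empty-list fallback, and the 50 ms timeout here are illustrative assumptions.

```python
import asyncio
from typing import Awaitable, Dict, List


async def call_with_fallback(call: Awaitable[List[int]], timeout_s: float) -> List[int]:
    """Await one downstream call; degrade to an empty result if it is slow or fails."""
    try:
        return await asyncio.wait_for(call, timeout=timeout_s)
    except Exception:  # timeouts and backend errors alike
        return []


async def fan_out(calls: Dict[str, Awaitable[List[int]]], timeout_s: float = 0.05) -> Dict[str, List[int]]:
    """Issue all calls concurrently; total latency is bounded by roughly timeout_s."""
    names = list(calls)
    results = await asyncio.gather(*(call_with_fallback(calls[n], timeout_s) for n in names))
    return dict(zip(names, results))


# Hypothetical backends: one healthy, one too slow, one failing.
async def recent_views() -> List[int]:
    await asyncio.sleep(0)
    return [1, 2]


async def knn_index() -> List[int]:
    await asyncio.sleep(1)  # exceeds the latency budget
    return [3]


async def ranker() -> List[int]:
    raise RuntimeError("backend unavailable")


async def main() -> Dict[str, List[int]]:
    return await fan_out({"recent": recent_views(), "knn": knn_index(), "ranker": ranker()})


result = asyncio.run(main())
print(result)  # {'recent': [1, 2], 'knn': [], 'ranker': []}
```

Running the calls concurrently means total latency tracks the slowest call rather than the sum of all calls, and the per-call fallback keeps one misbehaving building block from failing the whole request.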

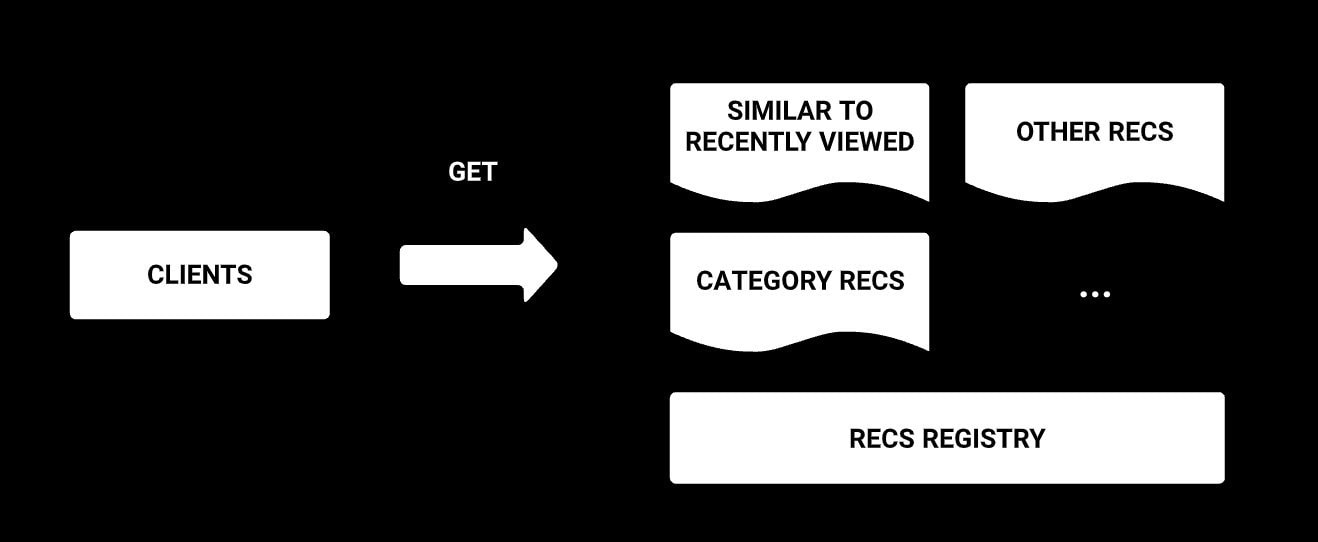

In terms of consumer interfaces, all recommendations are exposed through a single, consistent API that acts as a registry of all available recommendations: the Recs Registry.
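A registry like this can be sketched as a name-to-module mapping behind one uniform call. Everything in the sketch is hypothetical (`RecsRegistry`, `RecsRequest`, the `similar_listings` module, and its toy output); it only illustrates the design idea that every module shares one request type and one response shape, and that the registry can enumerate what is available.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class RecsRequest:
    """Hypothetical request type shared by every registered module."""
    user_id: Optional[int] = None
    listing_id: Optional[int] = None
    limit: int = 10


Recommender = Callable[[RecsRequest], List[int]]


class RecsRegistry:
    """One entry point for every recommendation module, keyed by name."""

    def __init__(self) -> None:
        self._modules: Dict[str, Recommender] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name: str, description: str = "") -> Callable[[Recommender], Recommender]:
        def decorator(fn: Recommender) -> Recommender:
            if name in self._modules:
                raise ValueError(f"duplicate recs module: {name}")
            self._modules[name] = fn
            self._descriptions[name] = description
            return fn
        return decorator

    def available(self) -> Dict[str, str]:
        # What an internal browsing tool could list for product teams.
        return dict(self._descriptions)

    def get(self, name: str, request: RecsRequest) -> List[int]:
        if name not in self._modules:
            raise KeyError(f"unknown recs module: {name}")
        return self._modules[name](request)[: request.limit]


registry = RecsRegistry()


@registry.register("similar_listings", "Listings similar to the one being viewed")
def similar_listings(req: RecsRequest) -> List[int]:
    # Toy logic standing in for a real two-pass pipeline.
    return [req.listing_id + i for i in range(1, 20)] if req.listing_id is not None else []


print(registry.get("similar_listings", RecsRequest(listing_id=100, limit=3)))  # [101, 102, 103]
print(registry.available())  # {'similar_listings': 'Listings similar to the one being viewed'}
```

Clients depend only on a module name and the shared request type, so new recommendation modules can be added without introducing another bespoke API.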

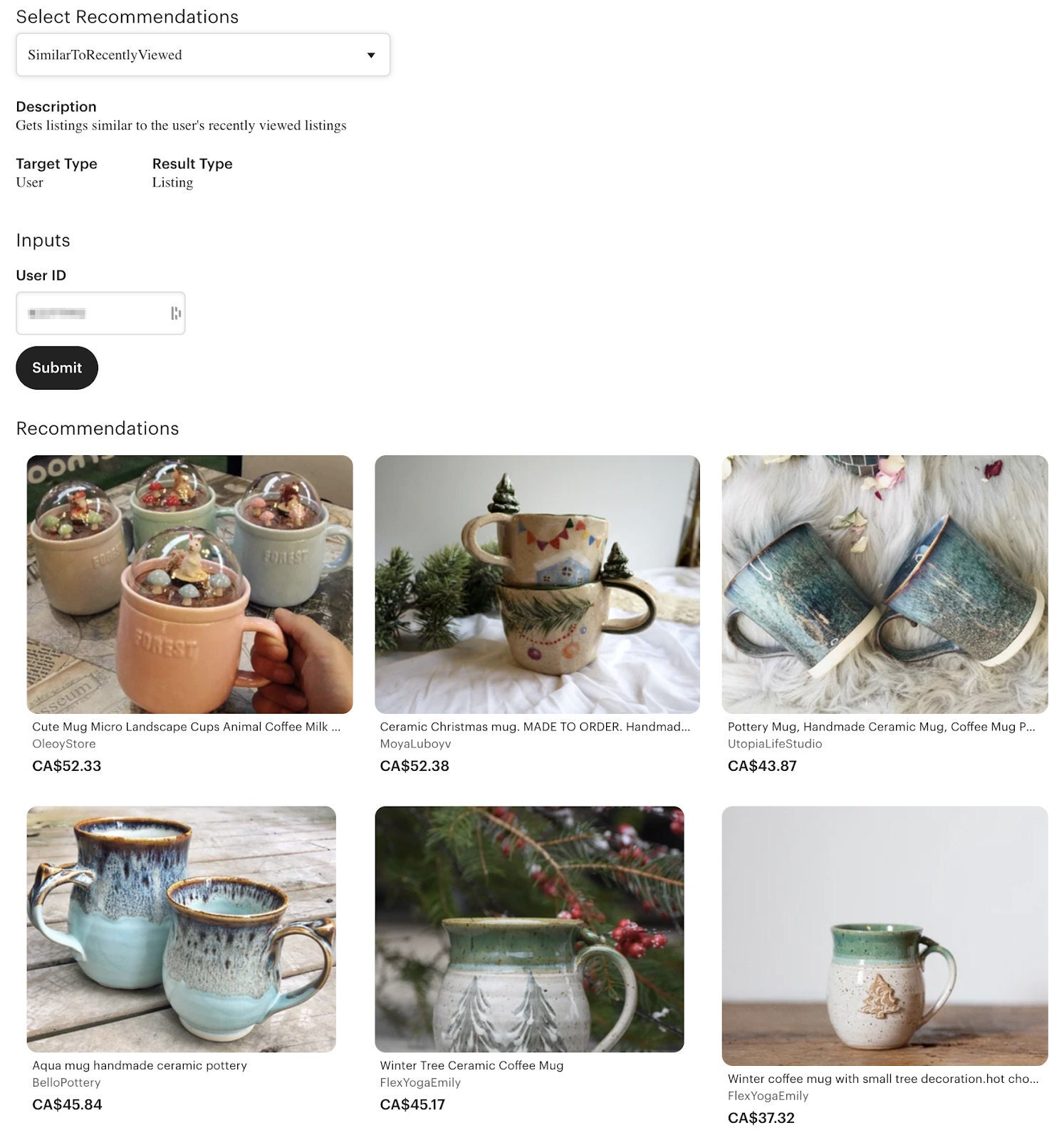

At Etsy, one of our guiding principles is to minimize waste. If a single ML model can be trained once and used for multiple experiments, we encourage teams to reuse it rather than rebuild it. However, we also know that finding the right model for a given experiment can be hard. To mitigate this problem, we built an internal user interface that exposes all recommendations from the Recs Registry and allows product teams to browse available recommendations and inject them into different product experiences.

Last but not least, to make it easy to debug and productize recommendations, we built a set of dashboards that engineers can use to visualize the performance of their recommendations and adjust hyper-parameters as needed.

Future work

Building a platform is a continuous effort, and even though we've learned and built a lot, we know that there are still many challenges ahead of us. Examples include generating recommendations directly in the UI, enabling new experimentation techniques such as multi-armed bandits, and pushing the limits of how much data we can process when making predictions.

Finally, I want to say a big thanks to everyone who worked on evolving these systems throughout the years: Panpan Song, Stan Schwertly, Davis Kim, Cristina Colón, Amanda daSilva, Tanner Whyte, Andrew D'Amico, Chris Ernst, Sheila Hu, Ruixi Fan, Bai Xie, Matt Gedigian and Miguel Alvarado.