How We Built A Context-Specific Bidding System for Etsy Ads

Etsy Ads is an on-site advertising product that gives Etsy sellers the opportunity to promote their handcrafted goods to buyers across our website and mobile apps.

Sellers who participate in the Etsy Ads program are acting as bidders in an automated, real-time auction that allows their listings to be highlighted alongside organic search results, when the ad system judges that they’re relevant. Sellers only pay for a promoted listing when a buyer actually clicks on it.

In 2019, when we moved all of our Etsy Ads sellers to automated bid pricing, we knew it was important to have a strong return on the money our sellers spent with us. We are aligned with our sellers in our goal to drive as many converting and profitable clicks as possible. Over the past year, we’ve doubled down on improvements to the bid system, developing a neural-network-powered platform that can determine, at request time, when the system should bid higher or lower on a seller’s behalf to promote their item to a buyer. We call it contextual bidding, and it represents a significant improvement in the flexibility and effectiveness of the automation in Etsy Ads.

First, Some Background

In the past, Etsy Ads gave sellers the option to set static bids for their listings, based on their best estimate of value. That bid price would be used every time one of their listings matched for a search. If a seller only ever wanted to pay 5 cents for an ad click, they could tell us and we would bid 5 cents for them every time.

The majority of our sellers didn’t have strong opinions about per-listing bid prices, and for them we had an algorithmic auto-bidding system to bid on their behalf. But even for sellers who self-priced, most adopted a “set it and forget it” strategy. Their prices never changed, so our system made the same bid for them at the highest-converting times during the weekend as it did on, say, a Tuesday at 4 a.m. Savvier sellers adjusted their bids day-to-day to take advantage of weekly traffic trends. No matter how proactive they were, though, sellers were still limited because they lacked request-time information about searches. And they could only set one bid price across all ad requests.

Our data shows that conversion rates differ across many axes: hour-of-day, day-of-week, platform, page type. In other words, context. We migrated our Etsy Ads sellers to algorithmic bid pricing because we wanted to ensure that all of them, regardless of their sophistication with online advertising, had a fair chance to benefit from promoting their goods on Etsy. But to realize that goal, we knew we needed to build a system that could set context-aware bids.

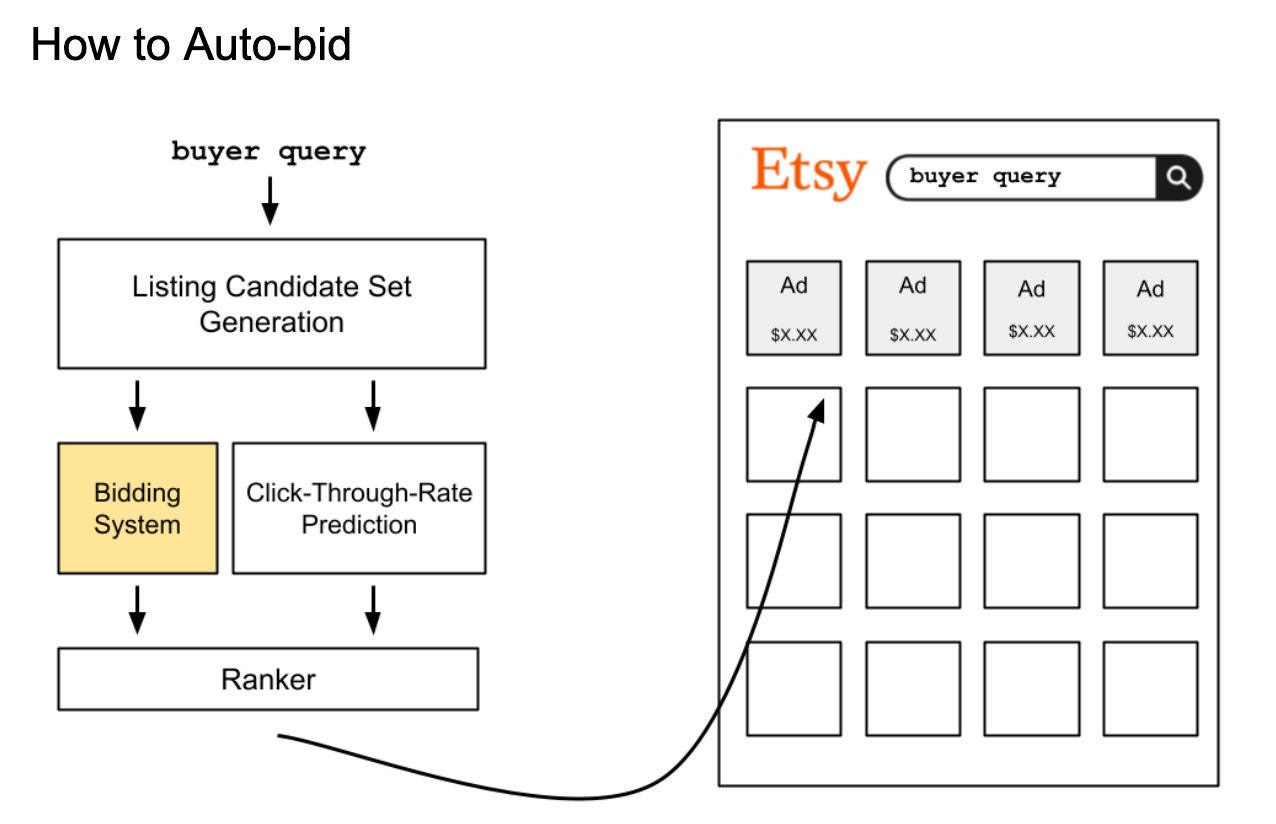

How to Auto-bid

Etsy Ads ranks listings based on their bid price, multiplied by their predicted click-through rate, in what’s known as a second-price auction. This is an auction structure where the winning bidder only has to pay $0.01 above what would be needed to outrank the second-highest bidder in the auction.

In a second-price auction, it is in the advertiser’s best interest to bid the highest amount they are willing to pay, knowing that they will often end up paying less than that. This is the strategy our auto-bidder adopts: it sets a seller’s bid based on the value they can expect from an ad click.
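As a rough illustration of the ranking and pricing rule described above, here is a minimal Python sketch. The `Candidate` class, the `run_auction` function, and the sample listings are hypothetical names and numbers made up for this example. Capping the price at the winner's own bid is also an assumption, not a description of the production auction.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    listing_id: str
    bid: float   # maximum the seller is willing to pay per click, in dollars
    pctr: float  # predicted click-through rate for this request


def run_auction(candidates, increment=0.01):
    """Rank by bid * pCTR and price the winner just above the runner-up.

    Assumes `candidates` is non-empty.
    """
    ranked = sorted(candidates, key=lambda c: c.bid * c.pctr, reverse=True)
    winner = ranked[0]
    if len(ranked) == 1:
        # No competitor: this sketch charges only the increment (an assumption).
        return winner, increment
    runner_up = ranked[1]
    # Smallest bid that would still match the runner-up's score, plus one cent.
    price = runner_up.bid * runner_up.pctr / winner.pctr + increment
    # In this sketch the winner never pays more than their own bid.
    return winner, round(min(price, winner.bid), 2)


ads = [
    Candidate("mug", bid=0.50, pctr=0.02),
    Candidate("towel", bid=0.30, pctr=0.05),
    Candidate("print", bid=0.40, pctr=0.01),
]
winner, price = run_auction(ads)
print(winner.listing_id, price)
```

In this toy auction the "towel" listing wins despite having the lowest bid, because its predicted click-through rate is the highest, and it pays less than the $0.30 it was willing to bid.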

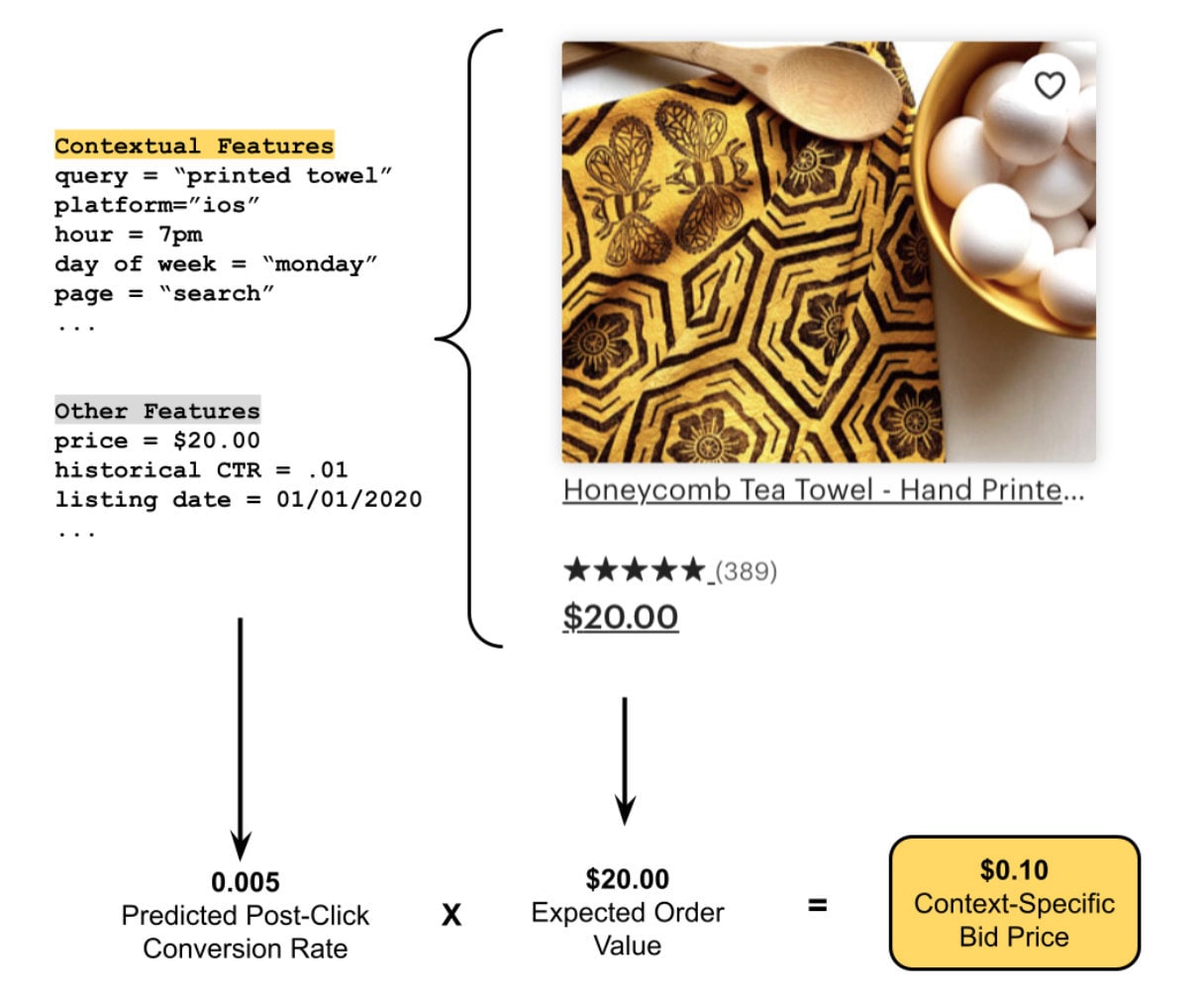

Expected Value of an Ad Click = Predicted Post-Click Conversion Rate × Expected Order Value

To understand the intuition behind this formula, consider a seller who has a listing priced at $100. If, on average, 1 out of every 10 buyers who click on their listing end up purchasing it, the seller could pay $10 per click and (over time) break even. But of course we do not want our sellers just to break even; we want their ads to generate more money in sales than the advertising costs. So the auto-bidder calculates the expected value of an ad click for each listing and then scales those numbers down to generate bid prices.

Bid = Expected Value of an Ad Click × Scaling Factor

At a high level, we are assigning a value to an ad click based on factors like the amount of sales that click is expected to generate.
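The two formulas can be written out in a few lines of Python. This is only a sketch: the function names are ours, and the scaling factor of 0.5 is a made-up value chosen for illustration, since the actual factor is not given here.

```python
def expected_click_value(pc_cvr, expected_order_value):
    """Expected sales generated by one ad click, in dollars."""
    return pc_cvr * expected_order_value


def bid_price(pc_cvr, expected_order_value, scaling_factor):
    """Scale the expected click value down so the seller does better than break-even."""
    if not 0.0 < scaling_factor <= 1.0:
        raise ValueError("scaling_factor should scale the value down, not up")
    return expected_click_value(pc_cvr, expected_order_value) * scaling_factor


# The $100 listing from the example: 1 in 10 clicks converts.
break_even = expected_click_value(pc_cvr=0.10, expected_order_value=100.0)

# Hypothetical scaling factor of 0.5, for illustration only.
bid = bid_price(pc_cvr=0.10, expected_order_value=100.0, scaling_factor=0.5)
print(break_even, bid)
```

With these inputs the break-even value is about $10 per click, and the hypothetical scaling factor halves it to a bid of about $5.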

Obviously, there’s a lot riding on that predicted conversion rate. Even marginal improvement there makes a difference across the whole system. Our old automated bidder was, in a sense, static: it estimated the post-click conversion rate (PC-CVR) for each listing only once per day. But a contextual bidder, with access to a rich set of attributes for every request, could set bids that were sensitive to how conversion is affected by time of day, day of week, and platform. Most importantly, it would be able to take relevance into account, bidding higher or lower based on factors like how the listing ranked for a buyer’s search (all in a way that respects users’ privacy choices). And it would do all that in real time, with much higher accuracy, thousands of times a second.

Example of a context-specific bid for a “printed towel” search on an iOS device. This listing matched the query, and given its inputs our model predicts that 5 of 1000 clicks should lead to a purchase. With an expected order value of $20, the bid should be around $0.10. The adaptive bid price helps sellers win high purchase-intent traffic and saves budget on low purchase-intent traffic.

Better PC-CVR through Machine Learning

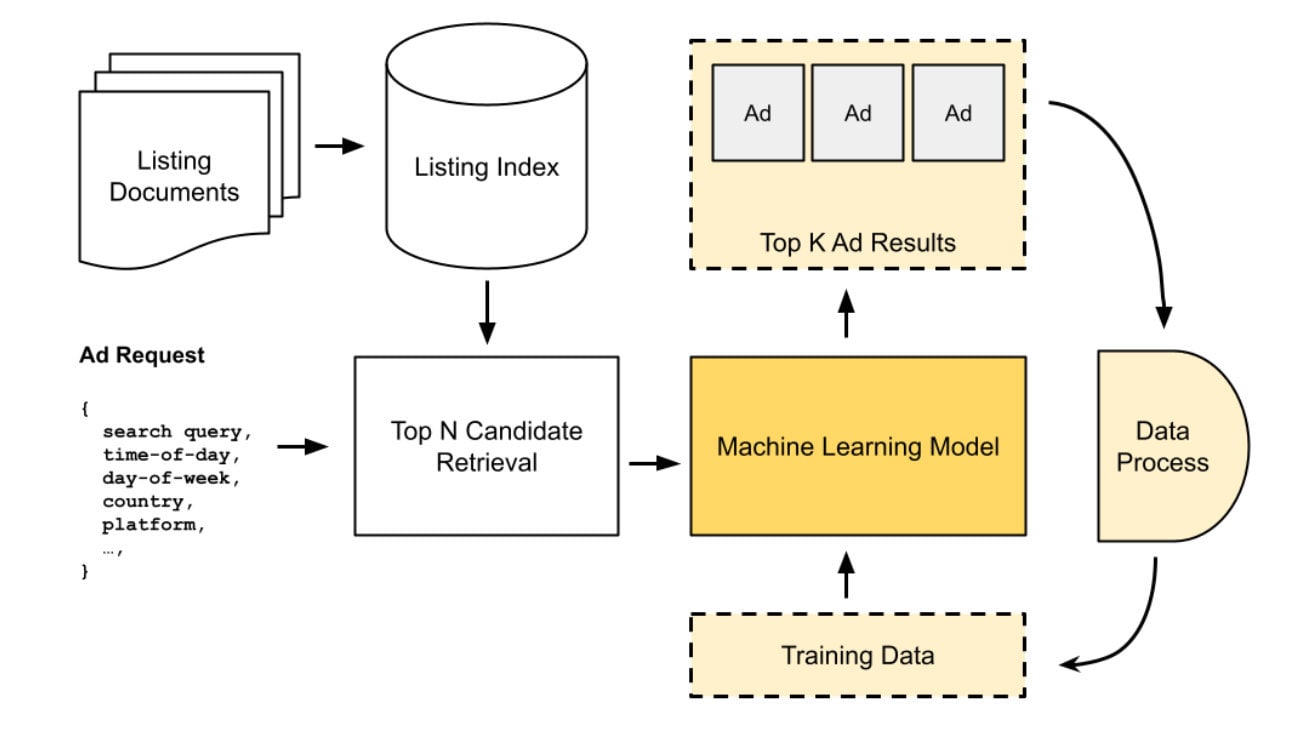

To power our new contextual bidder, we adopted learning-to-rank, a widely applied information-retrieval methodology that uses machine learning to create predictive rankings.

On each search ad request, our system collects the top N matched candidate listings and predicts a post-click conversion rate for each of them. These predictions are combined with each listing’s expected order value to generate per-listing bids; the candidates are then sorted and the top k results are returned. Any clicked ads are fed back into our system as training data, with a target label attached that indicates whether or not those ads led to a purchase.
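The request flow described above can be sketched as follows. Everything here is illustrative: `toy_predict_pc_cvr` is a stand-in for the real model, the dictionary keys, the platform multiplier, and the scaling factor are invented for the example, and the real system's data structures are not shown in this post.

```python
def toy_predict_pc_cvr(listing, context):
    """Stand-in for the real model: a historical rate nudged by context."""
    boost = 1.2 if context["platform"] == "ios" else 1.0  # made-up multiplier
    return min(1.0, listing["historical_cvr"] * boost)


def rank_ads(candidates, context, predict_pc_cvr, scaling_factor, k):
    """Score each matched candidate and return the top k by bid * pCTR."""
    scored = []
    for listing in candidates:
        pc_cvr = predict_pc_cvr(listing, context)
        bid = pc_cvr * listing["expected_order_value"] * scaling_factor
        scored.append({**listing, "bid": bid, "score": bid * listing["pctr"]})
    scored.sort(key=lambda ad: ad["score"], reverse=True)
    return scored[:k]


def training_example(ad, context, purchased):
    """Turn a clicked ad into a labeled example for the next training run."""
    return {"listing_id": ad["listing_id"], "context": context, "label": int(purchased)}


candidates = [
    {"listing_id": "a", "expected_order_value": 20.0, "pctr": 0.03, "historical_cvr": 0.005},
    {"listing_id": "b", "expected_order_value": 50.0, "pctr": 0.01, "historical_cvr": 0.004},
    {"listing_id": "c", "expected_order_value": 10.0, "pctr": 0.05, "historical_cvr": 0.002},
]
context = {"platform": "ios", "hour_of_day": 20}

top = rank_ads(candidates, context, toy_predict_pc_cvr, scaling_factor=0.5, k=2)
example = training_example(top[0], context, purchased=True)
print([ad["listing_id"] for ad in top], example["label"])
```

Passing the predictor in as a function keeps the ranking logic independent of the model, which is one way to swap a GBDT for a neural network without touching the rest of the flow.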

The first version of our new bidding system to reach production used a gradient-boosted decision tree (GBDT) model to do PC-CVR prediction. This model showed substantial improvements over the baseline non-contextual bidding system.

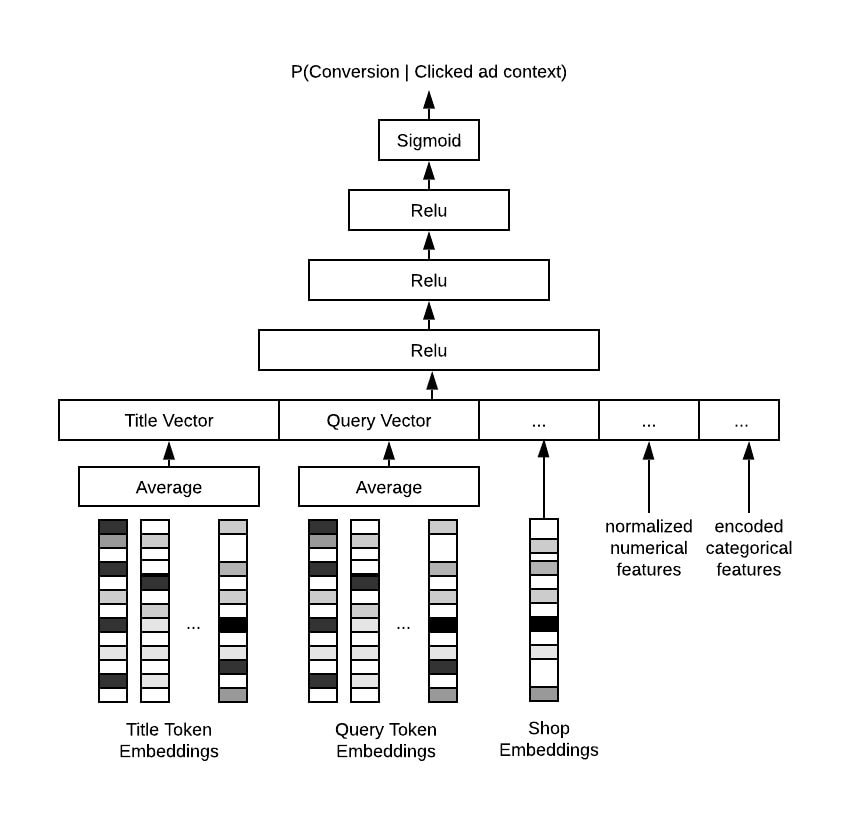

After we launched with our GBDT-based contextual bidder, we developed an even more accurate model that used a neural network (NN) for PC-CVR prediction. We trained a three-layer fully-connected neural network that used categorical features, numeric features, dense vector embeddings and pre-trained word embeddings fine-tuned to the task. The features are both contextual (e.g. time-of-day) and historical (e.g. 30-day listing purchase rate).

Evaluating Post-Click Conversion Rate

It’s not always easy to evaluate the quality of the predictions of a machine-learning model. The metrics we really care about are “online” metrics such as click-through-rate (CTR) or return-on-ad-spend (ROAS). An increase in CTR indicates that we are showing our buyers listings that are more relevant to them. An increase in ROAS indicates that we are giving our sellers a better return on the money they spend with us.

The only way to measure online metrics is to do an A/B test. But these tests take a long time to run, so it is beneficial to have a strong offline evaluation system that allows us to gain confidence ahead of online experimentation. From a product perspective, offline evaluation metrics can be used as benchmarks to guide roadmaps and ensure each incremental model improvement translates to business objectives.

For our post-click conversion rate model, we chose to monitor Precision-Recall AUC (PR AUC) and ROC AUC. The difference between the two is that PR AUC is more sensitive to improvements on the positive class, especially when the dataset is extremely imbalanced. In our case, we used PR AUC as our primary metric because we did not downsample the negative examples in our training data.
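To see why PR AUC reacts more strongly than ROC AUC on imbalanced data, consider a toy set of 100 clicks with a single purchase. The sketch below is plain Python with no dependencies. It uses average precision as the PR AUC estimate, which is a common summary of the precision-recall curve, and the data is synthetic.

```python
def roc_auc(labels, scores):
    """Probability that a random positive outscores a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of the precision measured at the rank of each positive example.

    Assumes at least one positive; tied scores keep their input order.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits


# 100 clicks with distinct, descending scores and exactly one purchase.
scores = [(100 - i) / 100 for i in range(100)]
purchase_ranked_third = [1 if i == 2 else 0 for i in range(100)]
purchase_ranked_first = [1 if i == 0 else 0 for i in range(100)]

for labels in (purchase_ranked_third, purchase_ranked_first):
    print(round(roc_auc(labels, scores), 4), round(average_precision(labels, scores), 4))
```

Moving the one purchase from third place to first lifts ROC AUC only from about 0.98 to 1.0, while average precision triples from about 0.33 to 1.0.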

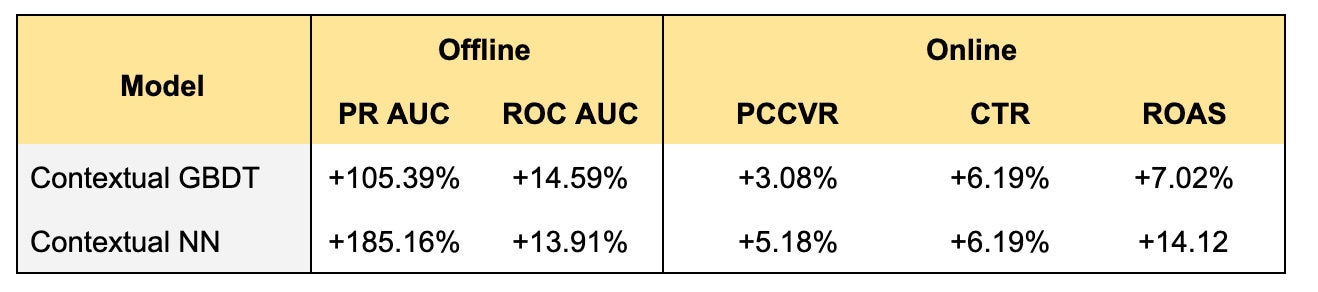

Below we’ve summarized the relative improvements of both models over the baseline non-contextual system.

The GBDT model outperformed the non-contextual model on both PR AUC (+105.39%) and ROC AUC (+14.59%). These results show that the contextual features added an important signal to the PC-CVR prediction. The large increase in PR AUC indicates that the improved performance was particularly dramatic for the purchase class.

The online experimental results were also promising. All parties (buyers, sellers and Etsy) benefited from this new bidding system. Buyers saw more relevant results from ads (+6.19% CTR) and made more purchases from ads (+3.08% PC-CVR), and sellers received more sales for every dollar they spent on Etsy Ads (+7.02% ROAS).

The neural network model saw even stronger offline and online results, validating our hypothesis that PR AUC was the most important offline metric to track and reaffirming the benefit of continuing to improve our PC-CVR model.

Transforming Post-Click Conversion Predictions to Bid Prices

We were very pleased with the offline performance we were able to get from our PC-CVR model, but we quickly hit a roadblock when we tried to turn our PC-CVR scores into bid prices.

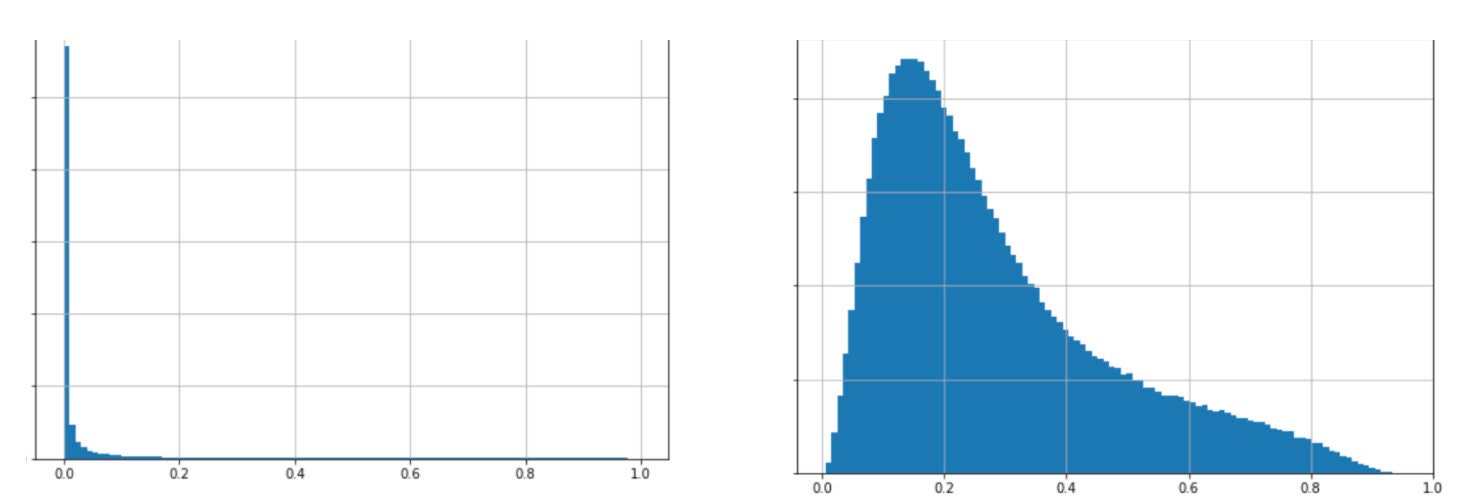

As the figure on the left shows, the distribution of predicted PC-CVR scores is right-skewed and has a long tail. This distribution of scores is not surprising, given that our data is extremely imbalanced and sparse. But the consequence is that we cannot use the raw PC-CVR scores to generate our bid prices, because the PC-CVR component would dominate the bidding system. We do not want such large variance in bid prices, and we never want to charge our sellers above $2 for a click.

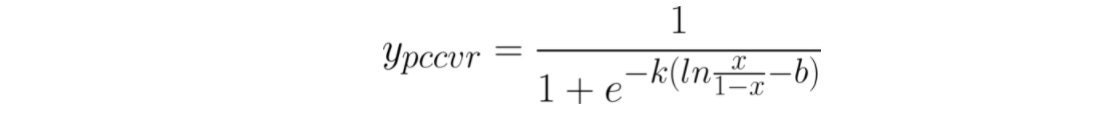

To solve these issues, we refitted the logit of predicted PC-CVR with a smoother logistic regression. Below is the proposed function:

where x is the predicted post-click conversion rate, and k and b are hyperparameters. Note that if k=1 and b=0, this function is the identity function.
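One function that matches this description, though not necessarily the exact form used in production, is f(x) = sigmoid(k · logit(x) + b): take the logit of the predicted rate, apply a linear transform, and map the result back to a probability. The Python sketch below is our reading of the text, with function names of our own choosing.

```python
import math


def logit(p):
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rescale_pc_cvr(x, k, b):
    """Assumed form of the smoothing function: sigmoid(k * logit(x) + b)."""
    return sigmoid(k * logit(x) + b)


low, high = 0.001, 0.10

# With k=1 and b=0 the function returns its input (up to rounding error).
unchanged = rescale_pc_cvr(low, k=1.0, b=0.0)

# With k < 1 the gap between a low and a high score shrinks.
raw_ratio = high / low
smoothed_ratio = rescale_pc_cvr(high, k=0.5, b=0.0) / rescale_pc_cvr(low, k=0.5, b=0.0)
print(unchanged, raw_ratio, smoothed_ratio)
```

Under this assumed form, k controls how much the long tail is compressed and b shifts the whole distribution up or down.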

We set the k and b parameters such that the average bid price was unchanged from the old system to the new system. While we did not guarantee that each seller’s bid prices would remain consistent, at least we could guarantee that the average bid prices would not change. The final distribution is shown in the right-hand graph above.
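Here is one way such a constraint could be satisfied, offered as a sketch rather than a description of the actual tuning procedure. It assumes the smoothing function has the form sigmoid(k · logit(x) + b), fixes k, and uses bisection to find the b that reproduces a target average bid. The sample rates, order values, scaling factor, and search bounds are all invented for the example; the $2 cap is the limit mentioned earlier.

```python
import math


def rescale_pc_cvr(x, k, b):
    """Assumed smoothing function: sigmoid(k * logit(x) + b)."""
    return 1.0 / (1.0 + math.exp(-(k * math.log(x / (1.0 - x)) + b)))


def mean_bid(pc_cvrs, order_values, k, b, scaling_factor, max_bid=2.00):
    """Average bid over a sample, with each bid capped at max_bid dollars."""
    bids = [
        min(max_bid, rescale_pc_cvr(x, k, b) * value * scaling_factor)
        for x, value in zip(pc_cvrs, order_values)
    ]
    return sum(bids) / len(bids)


def fit_b(pc_cvrs, order_values, k, target, scaling_factor, lo=-20.0, hi=20.0):
    """Bisection on b; works because the mean bid never decreases as b grows."""
    for _ in range(60):
        mid = (lo + hi) / 2.0
        if mean_bid(pc_cvrs, order_values, k, mid, scaling_factor) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


pc_cvrs = [0.001, 0.005, 0.02, 0.10]    # synthetic predictions
order_values = [20.0, 20.0, 20.0, 20.0]  # synthetic expected order values

old_mean = mean_bid(pc_cvrs, order_values, k=1.0, b=0.0, scaling_factor=0.5)
b_fit = fit_b(pc_cvrs, order_values, k=0.5, target=old_mean, scaling_factor=0.5)
new_mean = mean_bid(pc_cvrs, order_values, k=0.5, b=b_fit, scaling_factor=0.5)
print(old_mean, b_fit, new_mean)
```

The individual bids change under the new parameters, but their average matches the old one, which mirrors the guarantee described above.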

Conclusion

One topic we didn’t dive into in this post is the difficulty of experimenting with our bidding system. For one thing, real money is involved whenever we run an A/B test, which means that we need to be very careful about how we adjust the system. Nor is it possible to truly isolate the variants of an A/B test, because all of the variants use the same pool of available budget. And once we changed our bidding system to use a contextual model, we moved from having to make batch predictions once a day to having to make online predictions up to 12,000 times a second.

We’re planning a follow-up post on some of the machine-learning infrastructure challenges we faced when scaling this system. But for now we’ll just say that the effort was worth it. We took on a lot of responsibility two years ago when we moved all our sellers to our automated bidding system, but we can confidently say that even the most sophisticated of them (and we have some very sophisticated sellers!) are benefitting from our improved bidding system.